The term “Hadoop Cluster” can have many meanings. In the simplest sense, it can refer to the core components: HDFS (Hadoop Distributed File System), YARN (resource scheduler), and the Tez and MapReduce batch processing engines. In reality, it is much more.

Many other Apache big data tools are usually installed alongside these core components, and many of them run on resources managed by YARN. It is not uncommon to find HBase (Hadoop column-oriented database), Spark (scalable analytics engine), Hive (SQL data warehouse on Hadoop), Sqoop (database-to-HDFS transfer tool), and Kafka (streaming data pipelines). Some background services, like ZooKeeper, also provide a way to share cluster-wide configuration and coordination information.

Every service is installed separately with unique configuration settings. Once installed, each service requires a daemon to be run on some or all of the cluster nodes. In addition, each service has its own raft of configuration files that must be kept in sync across the cluster nodes. Even in the simplest installations, it is common to have 100 to 200 or more configuration files on a single server.
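
Keeping those files in sync is essentially a drift-detection problem. The following is a minimal sketch that assumes the contents of each node's configuration files have already been collected into memory. The collection step, node names, and file path are hypothetical. The code compares SHA-256 digests of each file across nodes and reports any file that exists in more than one version:

```python
import hashlib
from collections import defaultdict


def fingerprint(content: str) -> str:
    """Return the SHA-256 hex digest of one configuration file's contents."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def find_drift(node_configs: dict) -> dict:
    """Find configuration files whose contents differ between nodes.

    node_configs maps node name -> {file path -> file contents}.
    The result maps each drifting file path -> {digest -> [nodes holding that version]}.
    Files that are missing from some nodes are not reported by this sketch.
    """
    versions = defaultdict(lambda: defaultdict(list))
    for node, files in node_configs.items():
        for path, content in files.items():
            versions[path][fingerprint(content)].append(node)
    # A file is in sync only if every node that has it holds the same digest.
    return {path: dict(by_digest) for path, by_digest in versions.items() if len(by_digest) > 1}


if __name__ == "__main__":
    # Hypothetical snapshot of one file collected from three nodes.
    snapshot = {
        "node1": {"/etc/hadoop/conf/core-site.xml": "<configuration>A</configuration>"},
        "node2": {"/etc/hadoop/conf/core-site.xml": "<configuration>A</configuration>"},
        "node3": {"/etc/hadoop/conf/core-site.xml": "<configuration>B</configuration>"},
    }
    for path, by_digest in find_drift(snapshot).items():
        print(path, sorted(by_digest.values()))
```

Comparing digests rather than full file contents keeps the comparison cheap when hundreds of files are involved. A real tool would also need to flag files that are missing on some nodes.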

Once the configuration has been confirmed, start-up dependencies often require specific services to be started before others can work. For instance, HDFS must be started before many of the services will operate.

If this is starting to sound like an administrative nightmare, it is. These actions can be “scripted-up” at the command line level using tools like pdsh (parallel distributed shell). Still, even that approach gets tedious, and the scripts must be tailored to each installation.
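
The start-order problem and the pdsh scripting described above can be combined in a short sketch. The dependency map, host lists, and start commands below are illustrative assumptions, not a real cluster's layout. The code uses Python's standard `graphlib` module to group services into waves, where each wave depends only on earlier waves. It then builds one `pdsh -w <hosts> <command>` invocation per service without executing it:

```python
import shlex
from graphlib import TopologicalSorter

# Illustrative start-up dependencies (service -> services that must already be up).
# These are assumptions for the sketch, not a definitive dependency map.
START_DEPS = {
    "zookeeper": set(),
    "hdfs": set(),
    "yarn": {"hdfs"},
    "hbase": {"hdfs", "zookeeper"},
}

# Illustrative worker-daemon start commands and target hosts; real commands,
# paths, and host lists vary by installation.
START_CMD = {
    "zookeeper": "zkServer.sh start",
    "hdfs": "hdfs --daemon start datanode",
    "yarn": "yarn --daemon start nodemanager",
    "hbase": "hbase-daemon.sh start regionserver",
}
HOSTS = {
    "zookeeper": ["node1", "node2", "node3"],
    "hdfs": ["node1", "node2", "node3", "node4"],
    "yarn": ["node2", "node3", "node4"],
    "hbase": ["node3", "node4"],
}


def start_waves(deps):
    """Group services into waves; every service in a wave depends only on earlier waves."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies are circular
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


def pdsh_plan(deps, hosts, commands):
    """Build (but do not run) one pdsh argv per service, in dependency order."""
    plan = []
    for wave in start_waves(deps):
        for service in wave:
            plan.append(["pdsh", "-w", ",".join(hosts[service]), commands[service]])
    return plan


if __name__ == "__main__":
    for argv in pdsh_plan(START_DEPS, HOSTS, START_CMD):
        print(shlex.join(argv))  # quoted so each line can be pasted into a shell
```

Grouping services into waves, rather than a single flat order, shows which services could be started in parallel. Even so, every host list and command still has to be maintained by hand for each cluster, which is the tedium the management tools below were built to remove.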

The Hadoop vendors developed tools to aid in these efforts. Cloudera developed Cloudera Manager, and Hortonworks (now part of Cloudera) created open-source Apache Ambari. These tools provided GUI installation assistance (tool dependencies, configuration, etc.) and GUI monitoring and management interfaces for full clusters. The story gets a bit complicated from this point.

The back story

In the Hadoop world, there were three big players: Cloudera, Hortonworks, and MapR. Of the three, Hortonworks was often called the “Red Hat” of the Hadoop world. Many of their employees were big contributors to the open Apache Hadoop ecosystem. They also provided repositories where RPMs for the various services could be freely downloaded. Collectively, these packages were referred to as Hortonworks Data Platform or HDP.

As a primary author of the Ambari management tool, Hortonworks provided repositories used as an installation source for the cluster. The process requires some knowledge of your needs and Hadoop cluster configuration, but in general Ambari is very efficient in creating and managing an open Hadoop cluster (on-prem or in the cloud).

In the first part of 2019, Cloudera and Hortonworks merged. Around the same time, HPE purchased MapR.

Sometime in 2021, public access to HDP updates after version 3.1.4 was shut down, making HDP effectively closed source and requiring a paid subscription from Cloudera. One could still download these packages from the Apache website, but not in a form that could be used by Ambari.

Much to the dismay of many administrators, this restriction created an orphan ecosystem of open-source Ambari-based clusters and effectively froze these systems at HDP version 3.1.4.

The way forward

In April of this year, the Ambari team released version 3.0.0, and its release notes contain the following important news:

Apache Ambari 3.0.0 represents a significant milestone in the project’s development, bringing major improvements to cluster management capabilities, user experience, and platform support.

The biggest change is that Ambari now uses Apache Bigtop as its default packaging system. This means a more sustainable and community-driven approach to package management. If you’re a developer working with Hadoop components, this is going to make your life much easier!

The shift to Apache Bigtop removes the dependence on Cloudera's HDP subscription wall. The release notes also state, “The 2.7.x series has reached End-of-Life (EOL) and will no longer be maintained, as we no longer have access to the HDP packaging source code.” This choice makes sense because Cloudera ceased mainstream and limited support for HDP 3.1 on June 2, 2022.

The most recent release of Bigtop (3.3.0) includes the following components, which should be installable and manageable through Ambari:

- alluxio 2.9.3

- bigtop-groovy 2.5.4

- bigtop-jsvc 1.2.4

- bigtop-select 3.3.0

- bigtop-utils 3.3.0

- flink 1.16.2

- hadoop 3.3.6

- hbase 2.4.17

- hive 3.1.3

- kafka 2.8.2

- livy 0.8.0

- phoenix 5.1.3

- ranger 2.4.0

- solr 8.11.2

- spark 3.3.4

- tez 0.10.2

- zeppelin 0.11.0

- zookeeper 3.7.2

The release notes for Ambari also mention these additional services and components:

- Alluxio Support: Added support for Alluxio distributed file system

- Ozone Support: Added Ozone as a file system service

- Livy Support: Added Livy as an individual service to the Ambari Bigtop Stack

- Ranger KMS Support: Added Ranger KMS support

- Ambari Infra Support: Added support for Ambari Infra in Ambari Server Bigtop Stack

- YARN Timeline Service V2: Added YARN Timeline Service V2 and RegistryDNS support

- DFSRouter Support: Added DFSRouter via ‘Actions Button’ in HDFS summary page

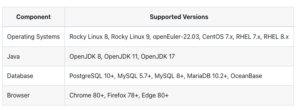

The release notes also provide this compatibility matrix, which covers a wide range of operating systems and the main processor architectures.

Back on the elephant

Now that Ambari is back on track, it can continue to help administrators manage open Hadoop ecosystem clusters. Ambari's installation and provisioning component manages all needed dependencies and creates a configuration based on the specific package selection. Running Apache big data clusters involves a lot of information, and tools like Ambari help administrators avoid many of the issues that arise from the complex interaction of tools, file systems, and workflows.
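
The dependency handling mentioned above can be illustrated with a minimal sketch. It assumes a hypothetical "requires" map, and Ambari's actual stack definitions are more detailed. Selecting a service pulls in everything it transitively needs, which is a simple graph traversal:

```python
# Hypothetical service requirements for illustration only; Ambari's real stack
# definitions describe dependencies in much more detail.
REQUIRES = {
    "zookeeper": set(),
    "hdfs": set(),
    "yarn": {"hdfs"},
    "tez": {"hdfs", "yarn"},
    "hive": {"hdfs", "yarn", "tez"},
    "hbase": {"hdfs", "zookeeper"},
    "spark": {"hdfs", "yarn"},
}


def resolve(selected, requires=REQUIRES):
    """Return the selected services plus everything they transitively require.

    Raises KeyError for a service that is missing from the requirements map.
    """
    needed = set()
    stack = list(selected)
    while stack:
        service = stack.pop()
        if service in needed:
            continue  # already handled; also guards against cycles
        needed.add(service)
        stack.extend(requires[service])
    return needed


if __name__ == "__main__":
    print(sorted(resolve({"hive", "hbase"})))
```

Failing loudly on an unknown service is a deliberate choice in this sketch. Silently treating it as having no requirements could produce an incomplete installation.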

Finally, a special mention is in order for the Ambari community and the large amount of work required for this new release.
