Note: If you are a TL;DR type of person, let me give you the short answer to the title of the post: Yes! 🙂

For everyone else, I will try my hardest to keep this as short as possible. I will include as many pictures and CLI screens as I think are needed to help answer the scalability question, and no more. While I entertained the idea of making two separate posts regarding scalability, I felt it best to keep it to a single post since AP (access point) to AP communication and layer 3 roaming are best explained together. My wife and friends will tell you that I can be long-winded. I apologize in advance.

Let me just start by saying that I work for Aerohive Networks. I have been an employee of Aerohive for about 3 months. In that time, I have learned a tremendous amount about the overall Aerohive solution and architecture. Prior to working for Aerohive, I worked for a reseller that sold Cisco (including Meraki), Aruba, and Aerohive. I wasn’t unaware of Aerohive, but let’s be honest for a minute. Aerohive doesn’t have a lot of information out there about how its various protocols work. This isn’t unique to Aerohive, as plenty of vendors withhold deep technical information. It isn’t necessarily done on purpose. It just takes a lot of time and effort to get that information out there in a digestible format for customers and partners. The smaller the company, the harder that is to do. Lastly, there are plenty of people within IT who don’t really care how it works, as long as it works.

My goal with this post is simple. I will attempt to expound on Aerohive’s scalability. This topic comes up in pre-sales discussions from time to time. I have not been an Aerohive employee long enough to have it come up more than a handful of times. I can tell you that in my former position at a reseller for multiple wireless vendors (Aerohive, Aruba, Cisco, Meraki), this topic did come up, both with customers and internally among my colleagues at the reseller. I suspect that in the months and years to come, as I work in a pre-sales capacity at Aerohive, it will come up even more.

I’ll try to address the two biggest scalability issues. They are:

- AP to AP communications, to include associated client information

- Layer 3 roaming

Before I dive into that, a bit of initial work is needed for those unfamiliar with Aerohive and the concept of Cooperative Control. Through a set of proprietary protocols, APs talk to each other and exchange a variety of information about their current environment or settings, to include client information. For a basic overview of Cooperative Control and the protocols used within it, you can read this whitepaper on Aerohive’s website.

Got it? Good. Let’s tackle the first scalability “issue”.

AP to AP Communications

Imagine you have a building with multiple floors and about a thousand APs. You know that there are other wireless vendors with controller-based systems that can support this number of APs. You wonder how Aerohive would do the same thing with no central controller managing the RF environment and the associated clients. How can 1,000 APs manage to keep up with each other? Certainly, the controller that can handle 1,000 APs has sufficient processing power and memory to perform this task. No AP on the market would have similar processing power in a single AP. So how does Aerohive do it?

A few quick points:

- It is very likely that these 1,000 APs are not on the same local subnet from a management IP perspective. Maybe you divided the APs into 2, 3, or 4 subnets for management purposes with the intent of keeping the BUM (broadcast, unknown unicast, and multicast) traffic down to a reasonable level.

- Not all 1,000 APs are going to be within hearing distance (from an RF perspective) of each other. This is an important point to remember, so hang on to that one in the back of your mind.

The basis of AP to AP communications revolves around the Aerohive Mobility Routing Protocol, or AMRP. To take the definition from the whitepaper I linked to above:

AMRP (Aerohive Mobility Routing Protocol) – Provides HiveAPs with the ability to perform automatic neighbor discovery, MAC-layer best-path forwarding through a wireless mesh, dynamic and stateful rerouting of traffic in the event of a failure, and predictive identity information and key distribution to neighboring HiveAPs. This provides clients with fast/secure roaming capabilities between HiveAPs while maintaining their authentication state, encryption keys, firewall sessions, and QoS enforcement settings.

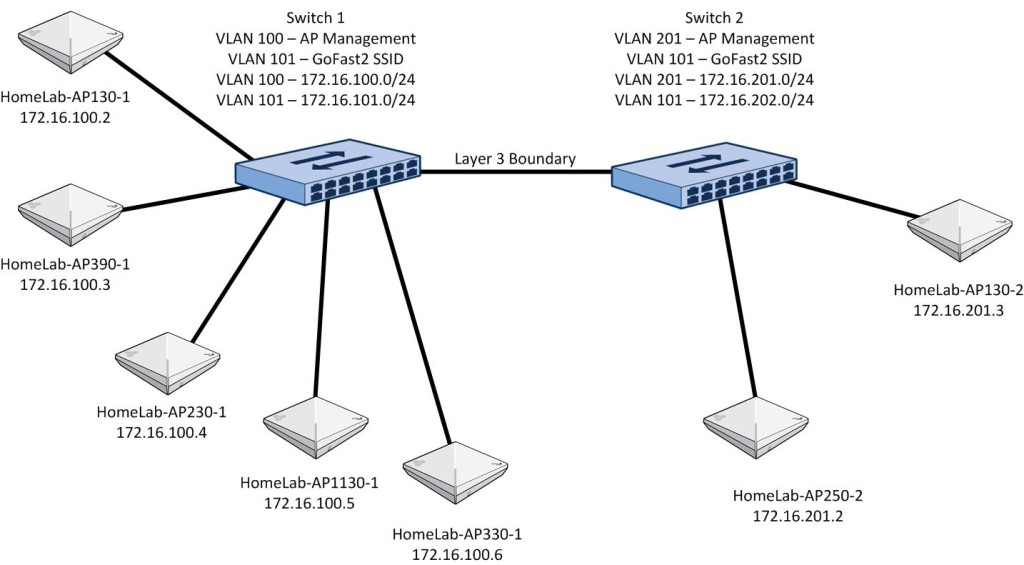

Since I don’t have 1,000 APs and associated PoE switches to build out my hypothetical multi-floor building, I am going to shrink it down to 2 switches and 7 APs. Five of the APs will be on one switch, and all APs on that switch will share the same management subnet and client VLANs. The other 2 APs will be on a second switch. They will use the same VLAN number for wireless client access, but on that switch the VLAN maps to a different IP subnet, and the AP management VLAN and IP subnet are different as well. The two switches are separated by a layer 3 boundary. My lab environment looks like this:

The switches and APs will boot up and start talking to each other. I’ll deal with automatic channel and transmit power selection in another post. AMRP will ensure that all APs are talking to each other. Since I am dealing with 7 APs in relatively close proximity to each other, they will all hear each other at pretty good signal strength. I’ll drill down more into that RF range aspect shortly.

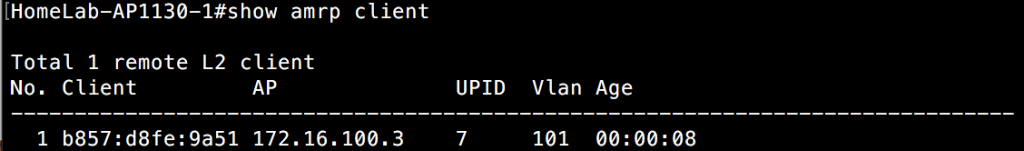

Let me connect to one of the 5 APs on switch 1. I can do this from either HiveManager, or by simply using SSH to connect to the AP locally. Alternatively, I could also use the local console port on the AP to grab this information. I am going to take a look at the neighboring APs from an AMRP perspective.

As you can see, this AP can see 4 neighboring APs. It doesn’t show the other 2 that reside on switch 2 because those APs are on a different subnet from a management perspective. Even if it can hear the other APs over the air, they still don’t show up in the AMRP neighbor list. We’ll get to that when we cover layer 3 roaming.

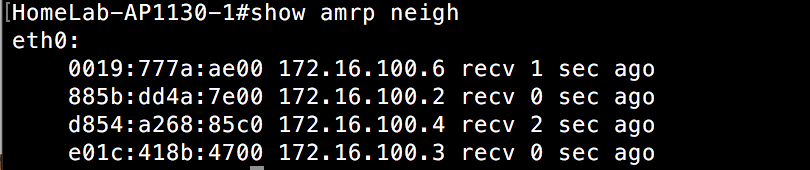

If I connect a client to the SSID (GoFast2) that these APs are all advertising, I can now see that client in the “show amrp client” CLI output. It appears on all APs within the same local subnet. In the first image, I am connected to an AP on the same local subnet, but not the one the client is directly associated to.

In this second image, I am connected to the AP the client is directly associated to. You can see the output is different as it shows the interface the client is connected to and not the IP address of the AP like in the previous CLI output.

Just to clear up any misunderstanding, AMRP is NOT building tunnels between each AP for communications purposes. It is handled in a secure way, but it is not done via a tunnel. All connected client information will be shared among the APs on the same local management subnet via AMRP. That brings me to an important issue regarding scalability.

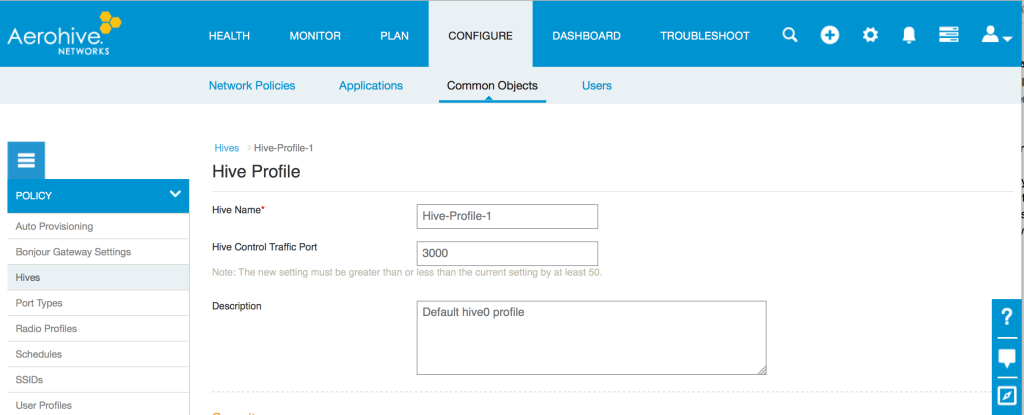

To increase scalability, Aerohive gives you the ability to share client information ONLY between APs that are within RF range of each other. By default, all APs on the same management subnet will share client information with each other. While this is perfectly fine in many environments, if there are a large number of APs on a given management subnet, you probably want to change that default setting and only have client session information sent to APs that the client can actually roam to. Keep in mind that restricting it to APs within RF range will also include any APs that a layer 3 roam could happen on. In HiveManager NG, this is done in the following location:

Configure/Common Objects/Hives

Select the Hive containing the APs you want to change. At the bottom of the screen in the Client Roaming section, just uncheck the box labeled “Update hive members in the same subnet and VLAN.” Update the configuration on your APs and you will now remove client session sharing between APs not in radio range of each other.

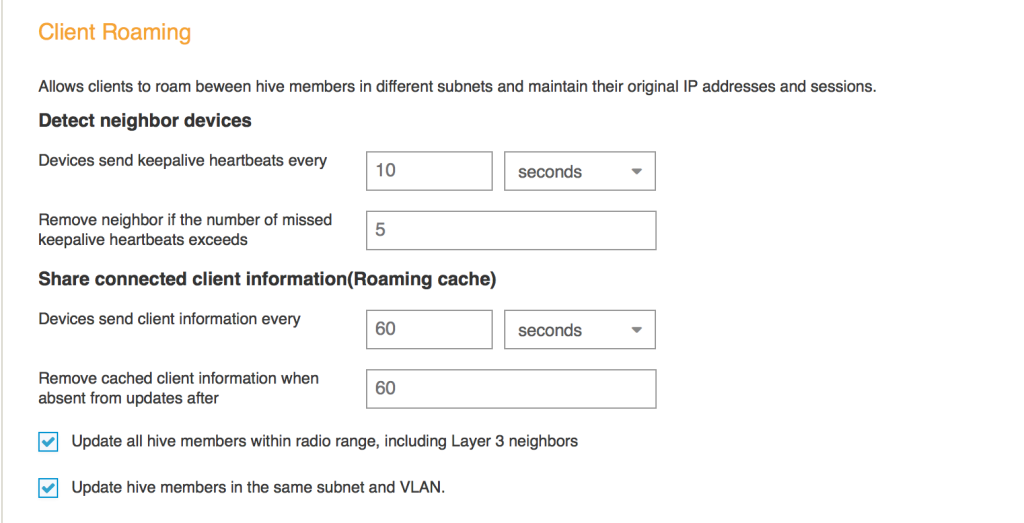

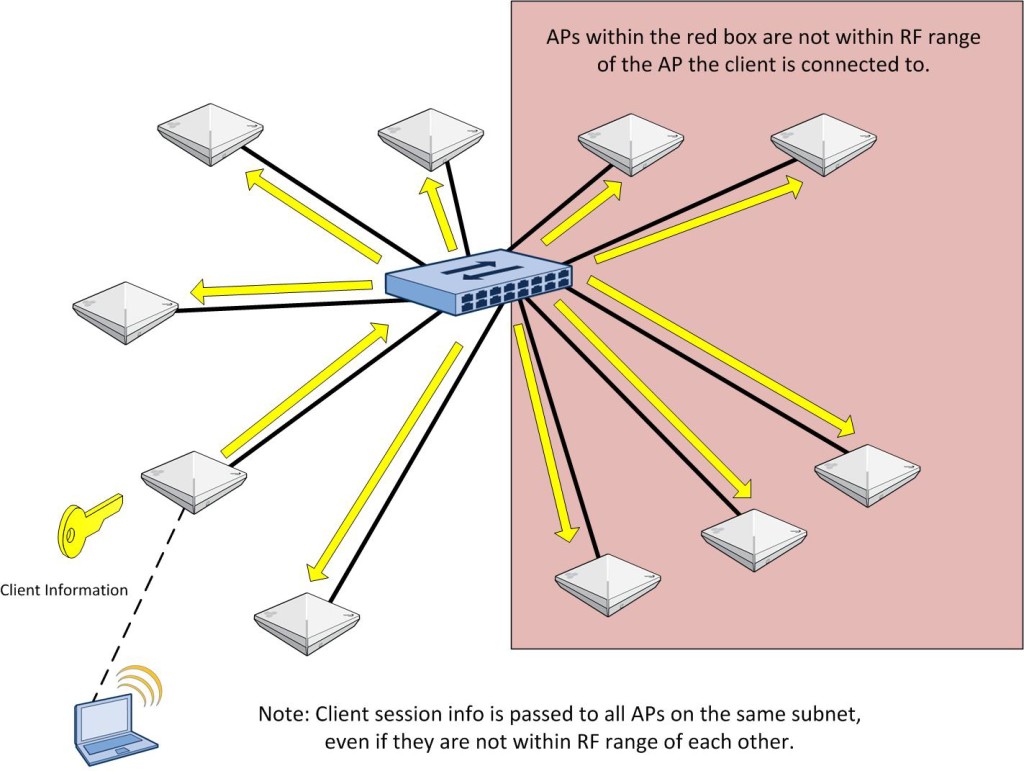

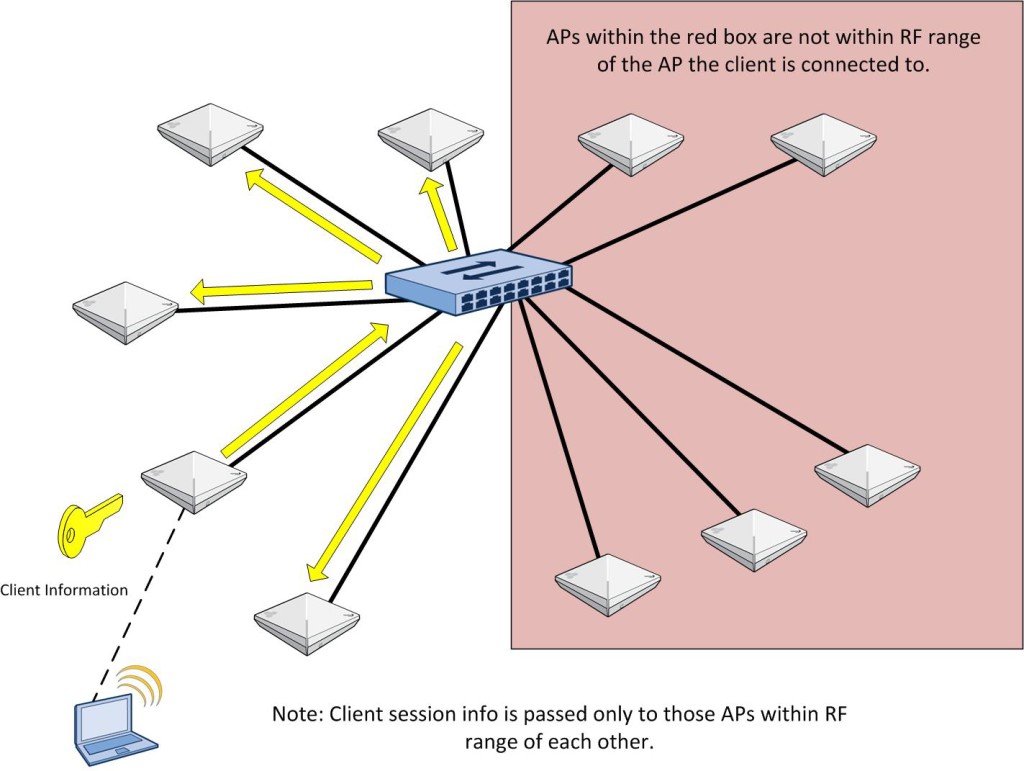

So now, instead of this:

You have this:

As a client roams from one AP to another, the AP it roams to will now share that client session with all of the APs within its RF range. The AP that the client roamed off of will stop sharing that client info with the APs in RF range of it, since it no longer maintains the client session. The cycle repeats itself as the client roams to yet another AP. Using this method, the APs do not have to know about all client sessions on all APs within the same local management subnet. That allows Aerohive to scale out from a layer 2 (and layer 3) roaming perspective.
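To make the difference between the two sharing modes concrete, here is a minimal Python sketch. It is not Aerohive code and does not model AMRP itself. The function name, the AP names, and the ten-AP "hallway" in which each AP only hears its adjacent APs are all hypothetical. It only illustrates how the set of APs receiving a client's session state shrinks, and moves with the client, once subnet-wide sharing is turned off.

```python
from typing import Dict, Set


def session_recipients(
    serving_ap: str,
    subnet_members: Set[str],
    rf_neighbors: Dict[str, Set[str]],
    subnet_wide: bool,
) -> Set[str]:
    """Return the APs that receive a client's session state from serving_ap."""
    if subnet_wide:
        # Default behavior: every other AP on the same management subnet.
        return subnet_members - {serving_ap}
    # Box unchecked: only the APs the serving AP can hear over the air.
    return set(rf_neighbors.get(serving_ap, set())) - {serving_ap}


# Hypothetical layout: ten APs in a row, each hearing only its adjacent APs.
aps = [f"ap{i}" for i in range(10)]
subnet = set(aps)
rf = {
    ap: {aps[j] for j in (i - 1, i + 1) if 0 <= j < len(aps)}
    for i, ap in enumerate(aps)
}

default_scope = session_recipients("ap4", subnet, rf, subnet_wide=True)
rf_scope = session_recipients("ap4", subnet, rf, subnet_wide=False)
print(len(default_scope), sorted(rf_scope))

# After the client roams to ap5, the set of informed APs moves with it.
after_roam = session_recipients("ap5", subnet, rf, subnet_wide=False)
print(sorted(after_roam))
```

In this toy layout the default mode informs nine APs, while the RF-scoped mode informs only the two APs next to the serving AP. In a real deployment the RF neighbor set can also contain APs on other subnets, which is where layer 3 roaming comes in.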

Layer 3 Roaming

Layer 3 roaming is handled by another Aerohive protocol entitled Dynamic Network Extension Protocol, or DNXP. To take the definition from the whitepaper I linked to above:

DNXP (Dynamic Network Extension Protocol) – Dynamically creates tunnels on an as needed basis between HiveAPs in different subnets, giving clients the ability to seamlessly roam between subnets while preserving their IP address settings, authentication state, encryption keys, firewall sessions, and QoS enforcement settings.

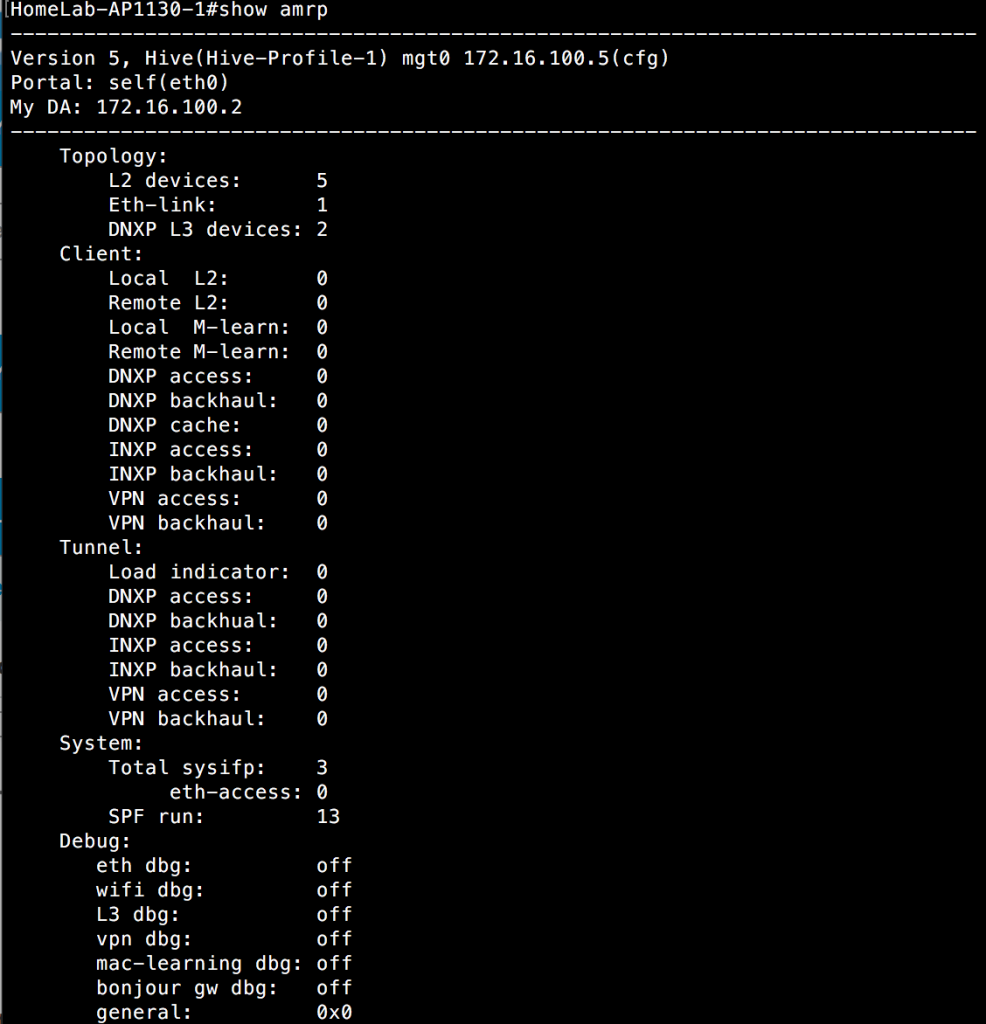

How it works is pretty interesting. To explain it, I will have to go back and talk about AMRP. On a given AP management subnet, AMRP takes after OSPF to a certain extent. If you are familiar with how OSPF works on a shared Ethernet segment, you know that there is this concept of a designated router, or DR. Additionally, there is a backup designated router, or BDR. The DR and BDR exist to reduce the amount of OSPF traffic flowing between OSPF neighbors. The DR is responsible for sending updates to all the other routers to inform them of the network topology. If it fails, the BDR takes over. Using that same concept, Aerohive APs on a shared Ethernet segment (i.e., the same layer 2 domain) elect a designated AP, or DA for short. They also elect a backup designated AP, or BDA. This can be seen by running the “show amrp” command on a given AP. Take a look at the following CLI output:

You can see here that the DA for the shared segment these APs are on is 172.16.100.2. The DA serves several different functions, but one of the things it keeps track of is the load level on all the APs within a given segment. When it comes to layer 3 roaming, this DA has a pretty important job. It decides which AP on its Ethernet segment will spin up the tunnel required for layer 3 roaming. Instead of just spinning up the tunnel from the AP the client left during its layer 3 roam, the DA seeks out the least loaded AP on the Ethernet segment and has that AP set up the tunnel to the AP the client roamed to. This chosen AP establishes the DNXP tunnel to the AP that the client roamed to on a different subnet and ensures that the network knows that this now-roamed client is reachable through the chosen AP.

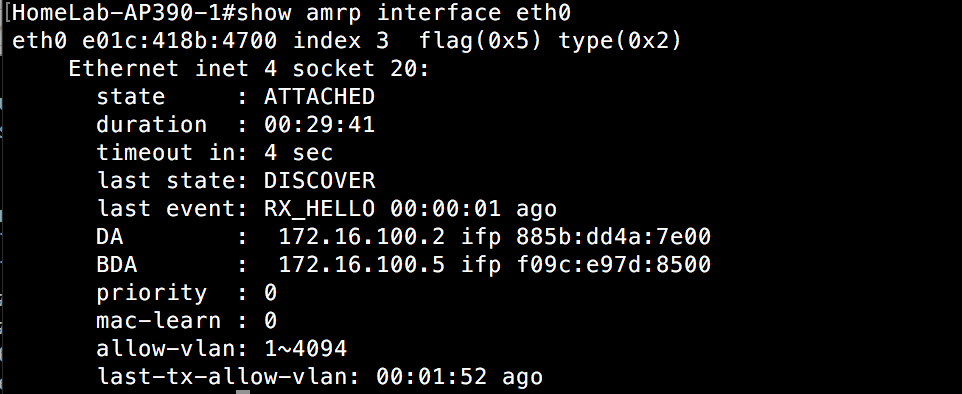
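The DA's choice of tunnel endpoint can be pictured with a few lines of Python. This is only a sketch of the "pick the least loaded AP" idea described above, not Aerohive's actual algorithm. The AP names, the integer load values, the tie-break rule, and the assumption that a tunnel adds one unit of load are all hypothetical.

```python
from typing import Dict


def pick_tunnel_ap(load_by_ap: Dict[str, int]) -> str:
    """Return the least loaded AP; ties are broken by name (arbitrary choice)."""
    return min(load_by_ap, key=lambda ap: (load_by_ap[ap], ap))


# Hypothetical load table as the DA might see it for its segment.
loads = {"ap-1": 12, "ap-2": 3, "ap-3": 7, "ap-4": 3, "ap-5": 9}

first = pick_tunnel_ap(loads)
loads[first] += 1  # assumption: hosting a tunnel adds one unit of load

second = pick_tunnel_ap(loads)
print(first, second)
```

Because the first tunnel raises the load on the AP that hosts it, the next roaming client ends up being handled by a different AP in this example.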

To see which APs are running as the DA or BDA, but without all the AMRP info from the previous command, you can use the “show amrp interface eth0” command, assuming your connection to the network from the AP is using eth0. It may be using a different interface.

I used the above AP, because it was not the DA or BDA. I wanted to show an AP that was in the “Attached” state. The APs that are not the DA or BDA will have a state of “Attached” in the same way that OSPF would show a “DROTHER” on a router that was not the DR or BDR.

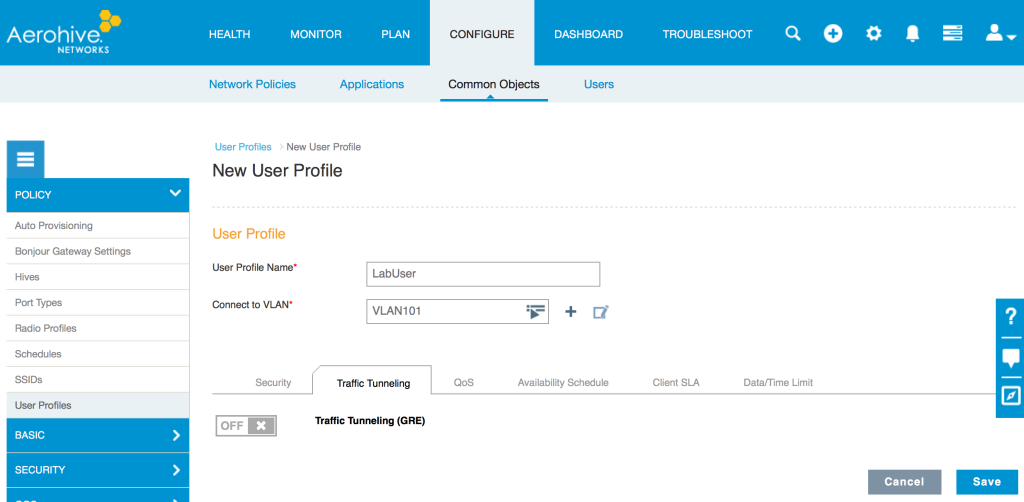

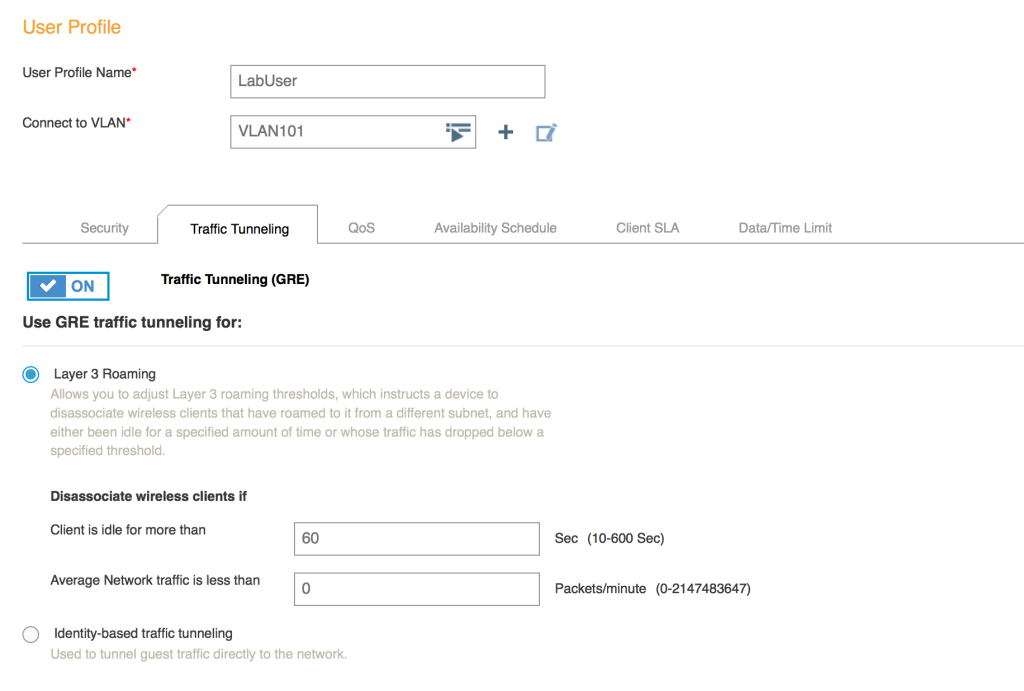
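For readers less familiar with the OSPF side of the analogy, the saving that a DR and BDR provide is easy to quantify. The sketch below counts full adjacencies on a shared OSPF segment with and without a DR/BDR. It describes OSPF only. Whether AMRP's message counts follow the same arithmetic is not something this post establishes, so treat the comparison as an illustration of the idea rather than a measurement of AMRP.

```python
def full_mesh_adjacencies(n: int) -> int:
    """Adjacencies if every router paired with every other router."""
    return n * (n - 1) // 2


def dr_bdr_adjacencies(n: int) -> int:
    """Adjacencies when every other router pairs only with the DR and BDR."""
    if n < 2:
        return 0
    # Each of the (n - 2) other routers pairs with DR and BDR,
    # plus one adjacency between the DR and BDR themselves.
    return 2 * (n - 2) + 1


for n in (5, 100, 1000):
    print(n, full_mesh_adjacencies(n), dr_bdr_adjacencies(n))
```

For the five APs on switch 1, that would be 10 pairings versus 7. At 1,000 devices on one segment, the gap is 499,500 versus 1,997.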

Another thing to note about layer 3 roaming is that you can restrict which clients can perform a layer 3 roam. This is controlled within the user profile, and a given SSID can have multiple user profiles based on a number of different classification methods. Whether you want to allow or deny layer 3 roaming, Aerohive gives you the choice at a fairly granular level.

To turn on layer 3 roaming for a particular user profile in HiveManager NG, you simply modify the user profile.

Configure/Common Objects/User Profiles

Turn on layer 3 roaming via the Traffic Tunneling tab and if needed, adjust the idle timeout/traffic threshold values. Save the user profile and update the applicable APs.

Layer 3 Roaming Test

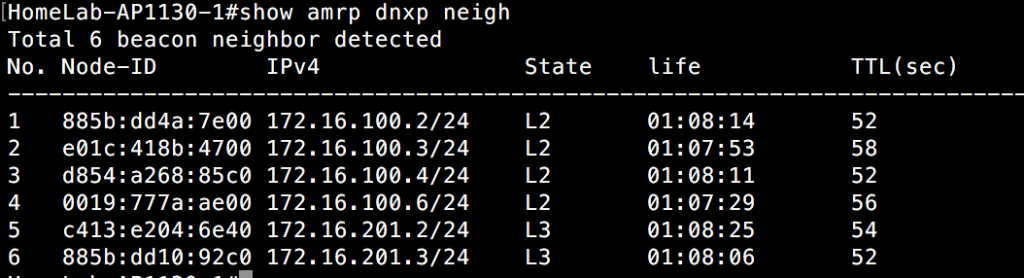

Although AMRP only shows neighbors on the same Ethernet segment, DNXP is aware of other APs that are nearby, but use a different subnet for the AP’s management IP. You can see these APs with the “show amrp dnxp neighbor” command as shown below:

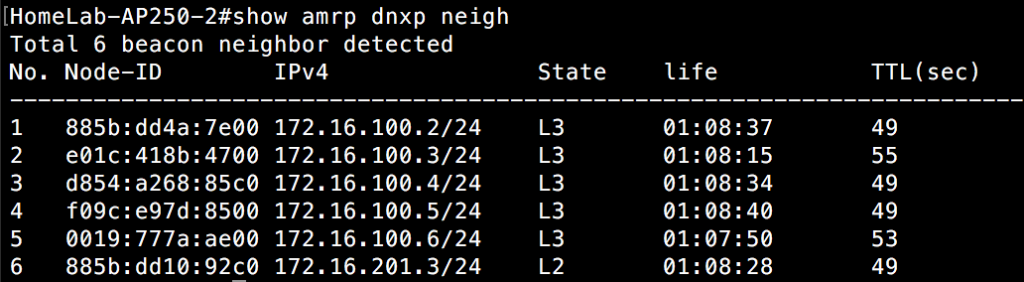

Notice that 4 of the APs are in an L2 state. These are the APs on the same Ethernet segment (switch 1) for the management IP. The other 2 APs are in the L3 state. The AP knows that clients could roam to these layer 3 neighbors. In order for this to work, the APs need to be members of the same “hive”, which is a fancy name for a shared administrative domain. Think of it like an autonomous system number that you would see in routing protocols like BGP. APs within the same hive share a common secret key, which allows them to communicate securely with each other and trade client state and other AP configuration information. If I look at an AP that resides on switch 2 (separated from switch 1 via layer 3), the opposite information appears, with 1 AP in an L2 state and 5 APs in an L3 state.

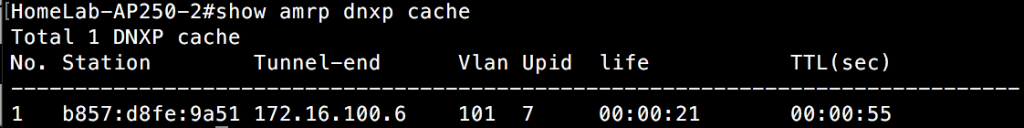
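The L2/L3 split in those two outputs comes down to whether a neighbor's management IP sits in the local AP's management subnet. Here is a small Python sketch of that comparison using the standard `ipaddress` module. The /24 prefix length, the 172.16.200.0/24 subnet for switch 2, and most of the host addresses are assumptions made for illustration. Real DNXP neighbor discovery also depends on hive membership and on the APs hearing each other over the air, which this sketch does not model.

```python
import ipaddress
from collections import Counter


def classify_neighbor(local_interface: str, neighbor_ip: str) -> str:
    """Return 'L2' if the neighbor is in the local management subnet, else 'L3'."""
    network = ipaddress.ip_interface(local_interface).network
    return "L2" if ipaddress.ip_address(neighbor_ip) in network else "L3"


# Hypothetical management addresses: five APs on switch 1, two on switch 2.
switch1_aps = [f"172.16.100.{host}" for host in range(2, 7)]
switch2_aps = ["172.16.200.2", "172.16.200.3"]
all_aps = switch1_aps + switch2_aps


def neighbor_states(local_ip: str) -> Counter:
    """Count L2 and L3 neighbors as seen from one AP (assumes a /24 mask)."""
    return Counter(
        classify_neighbor(f"{local_ip}/24", ip) for ip in all_aps if ip != local_ip
    )


from_switch1 = neighbor_states("172.16.100.3")
from_switch2 = neighbor_states("172.16.200.2")
print(dict(from_switch1), dict(from_switch2))
```

With these addresses, an AP on switch 1 sees four L2 and two L3 neighbors, and an AP on switch 2 sees one L2 and five L3 neighbors, matching the counts in the CLI output.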

I still have my client connected from the previous example discussing AMRP. It is connected to the AP 390 on switch 1. The 2 APs on switch 2 are separated by a layer 3 boundary from the other 5 APs, so are they aware of the client connected to the AP 390? Yes. Since all APs are within RF range of each other, client information is passed between them all. However, for APs separated from the client by a layer 3 boundary, DNXP comes into play. You can see these client sessions in another subnet by looking at the DNXP cache.

Note that although this client has not performed a layer 3 roam, the CLI output tells you where the tunnel is going to originate from across the layer 3 boundary when it does roam. This is because the designated AP (DA) has already determined which AP is the least loaded on the subnet that the client will roam from and has assigned tunneling duties to that AP in case the client needs to make a layer 3 roam.

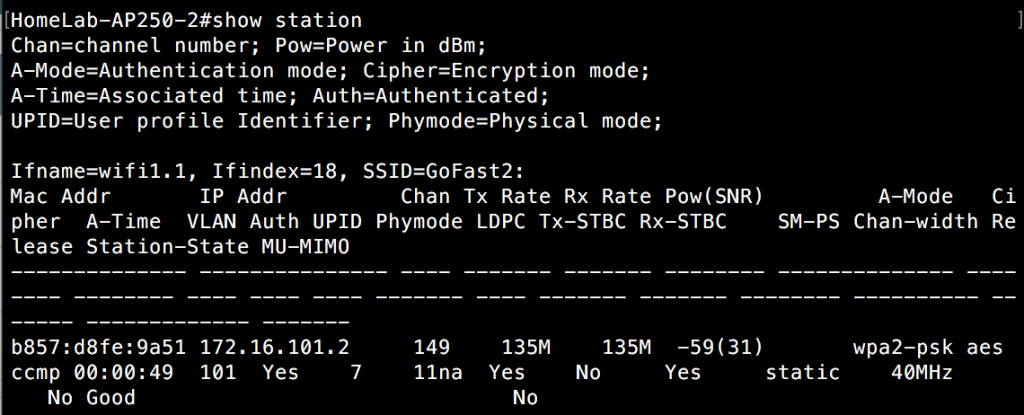

I have moved the client closer to one of the APs on switch 2 so that it roams. I actually had to move the AP out of my office and into the hallway, and move the client into the next room, so that the RSSI value on the AP it was connected to would be low enough for it to roam. As you can see in the CLI output below, the client has roamed over to the AP that resides on switch 2.

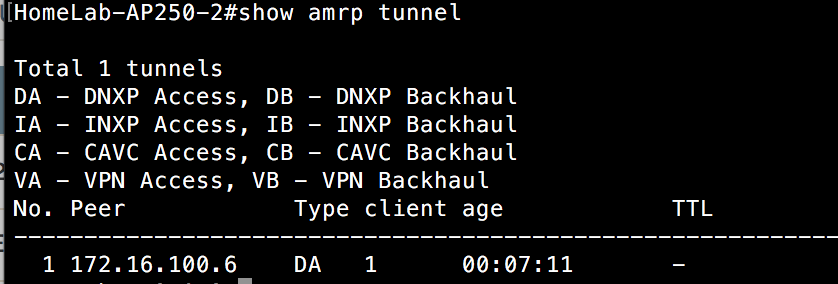

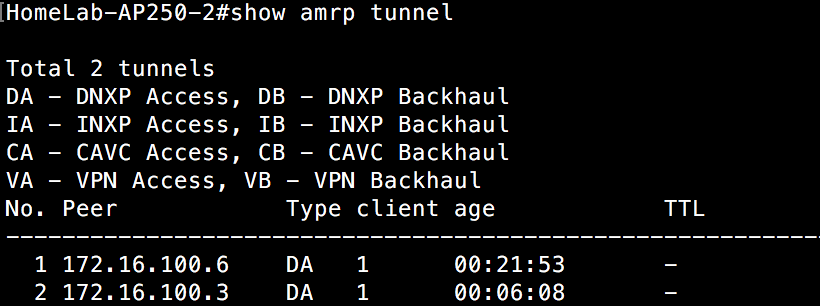

Now, I should be able to see a tunnel spun up between this AP 250 on switch 2 and the AP on switch 1 that the DA chose (172.16.100.6).

Here it is from the perspective of the AP the client roamed to on switch 2:

Here it is from the perspective of the AP (172.16.100.6) on switch 1 that was responsible for building the tunnel to connect the two subnets:

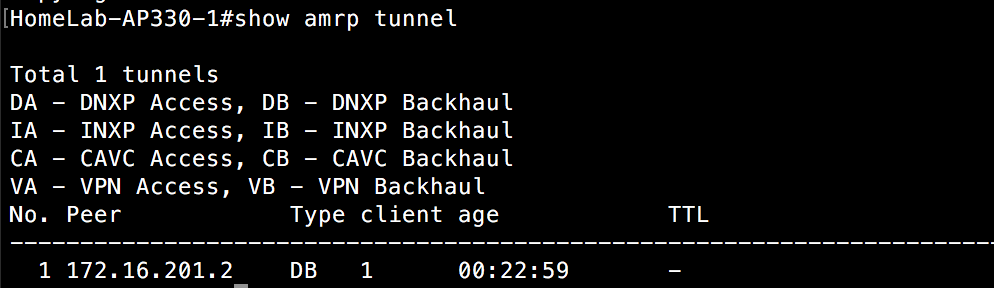

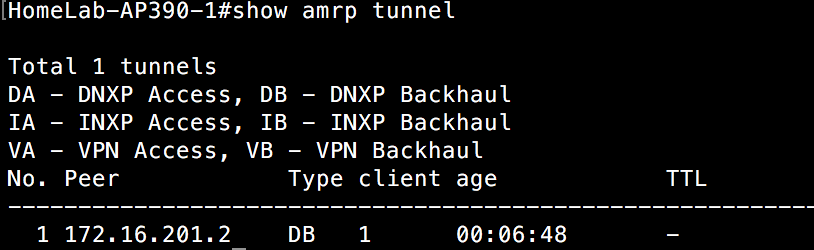

Other APs on switch 1 are also aware of this tunnel:

Let’s go one step further and add another client. I associate a client to an AP on the switch 1 side. For the purposes of maintaining some brevity, I won’t show all the CLI around that client before it roams. I moved the client into the same room as the first client and it associates to the AP 250 on switch 2. We can now take a look at how the layer 3 roam was constructed for this second client.

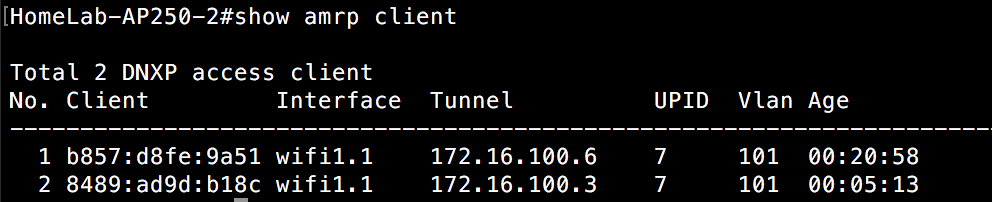

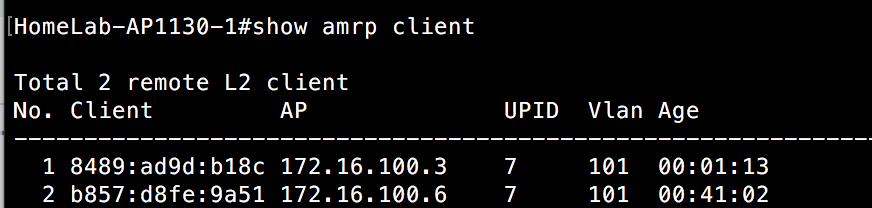

We can see above that both clients are attached to this AP via a layer 3 roam. This can be verified additionally with a “show amrp tunnel” command.

If we take a look at the APs on the network (switch 1) that these clients roamed from, we see that they are aware of the clients and how to reach them via the AP that set up the tunnel to the other side.

Here is that view from one of the APs on switch 1:

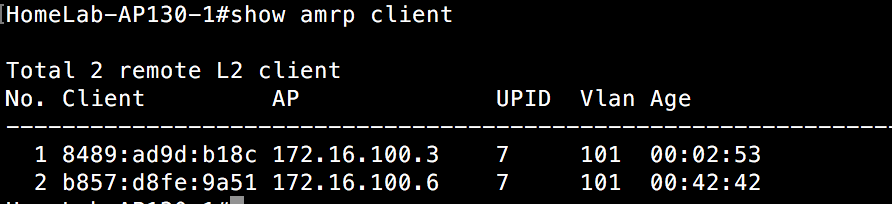

And the view from another AP on switch 1:

There is one thing in particular I want you to notice. Both clients have executed a layer 3 roam and have tunnels spun up bridging these two separate subnets. However, notice that the tunnel endpoint on the switch 1 side is different for each client. That is because the DA told one AP to set up a tunnel for layer 3 roaming purposes for the first client, but told a different AP to set up a layer 3 roaming tunnel for the other client. Remember that the DA is aware of each AP’s load on the subnet it is responsible for. It is spreading the load (no pun intended) among the various APs to keep from overloading any single AP. If there were a lot of clients on my home lab network and several of them performed a layer 3 roam at once, you might see tunnels originating from even more APs. It is this distribution of tunnel origination that allows layer 3 roaming to scale and not overload one particular AP.

Closing Thoughts

If you made it this far, congratulations. I told you this was going to be a long post. If nothing else, I hope I have shed a little more light on how Aerohive can scale when it comes to APs cooperating with each other and with regard to layer 3 roaming. As I mentioned earlier in the post, I did not cover automatic channel and transmit power selection. That is covered by a different protocol (ACSP) that works in conjunction with AMRP, and I hope to write another post soon showing how that works.

As with any solution, there are limits. Whether a controller based wireless network, or a cooperative control environment like Aerohive’s, at some point you can break it. It would be impossible for me to acquire the number of clients and access points to do this on my own. Not to mention the fact that I would need a decent sized building to spread out the APs enough to where they aren’t able to hear each other. I hope I was able to at least demonstrate the scalability, albeit on a smaller scale.

Let me know your thoughts or if you have any additional questions in the comments section below.

When the L3 roam is created between two APs on different subnets, is the connection more or less a GRE tunnel between the APs as long as the client is sending traffic? Once the client stops sending traffic during the L3 roam, does the AP then force the client to reassociate on the new subnet the new AP is configured for?

Chris,

The tunnel will stay up based on the idle settings and average network traffic settings. If either of those thresholds is violated, the client will be disconnected. By default, the network traffic threshold is set to 0, so it isn’t really considering that. However, it does have the idle setting at 60 seconds by default, so after a minute of no activity, the client will be disassociated. It should then associate to the same SSID, but it will get a new IP address.