Abstract

Google has now established itself as a mainstream language reference tool. This paper reports findings of a survey of non-native speakers of English concerning the patterns of their use of Google as a reference tool in EFL writing, and discusses implications of these findings on user assistance in terms of user guidance and software support. In comparison with the previous surveys, this survey is unique in having been conducted in a completely non-controlled setting on respondents more representative of the target population. The survey found a strong predominance of Google over the other reference tools and of ‘open search’ over ‘closed search’. This calls for the understanding of the Web as a new and unique reference tool rather than just a large corpus.

Introduction

Google has now established itself as a mainstream language reference tool. A survey I have recently conducted on the reference practice of non-native speakers of English in EFL writing (to be called ER-survey) has shown that Google is by far the most frequently used and most trusted of all available reference tools in EFL writing (see Table 7). This is consistent with the findings of other researchers that learners strongly prefer Google over English corpora (Conroy 2010, pp. 867, 874f, 878; Geiller 2014, p. 28f; Sha 2010, p. 390; Pérez-Paredes et al. 2011, p. 247; Pérez-Paredes et al. 2012). Indeed, ELT researchers are paying increasing attention to Google as a language learning tool, many using the term GALL (Google-assisted language learning) and many affirming its positive impact on L2 writing improvement (Panah et al. 2013; Bhatia and Ritchie 2009, p. 547; Conroy 2010; Chinnery 2008; Geiller 2014; Wu et al. 2009; Boulton 2012).

The main aim of this paper is to report findings of the ER-survey focusing on patterns of Google use as a reference tool in EFL writing. It pursues the following questions:

(i) How often do learners use Google to improve their writing vis-à-vis other reference tools?

(ii) What types of reference queries and searches do they make on Google?

(iii) How do they use Google, how successful are they, and what difficulties do they face?

(iv) What do the answers to the above questions tell us about helping users be more successful in their linguistic use of Google, in terms of user guidance and software support?

There have been several surveys on the linguistic use of Google. ER-survey is unique, however, in that whereas the previous surveys were conducted in a controlled setting, where some training, instruction, and/or software toolset were provided before the survey, ER-survey was completely non-controlled, taking the respondents untouched in their own, natural setting. Furthermore, whereas most of these surveys had a small number of university students as respondents, ER-survey is more representative of the target population, with a more diversified and larger number of respondents (73). Finally, whereas most of the previous surveys had Google merely as one of the reference tools, ER-survey almost exclusively focused on Google and asked more detailed questions about its use.

The non-controlled nature of the survey helped discover a strong predominance of Google over the other reference tools and of ‘open search’ over ‘closed search’. The predominance of open search is new to the currently prevailing understanding of “the Web as corpus” and calls for a new approach to user guidance and support in the linguistic use of Google.

Search types and query types

Google searches can be divided into three types as shown in Table 1:

The closed search only retrieves instances that exactly match the input (allowing some formal variants such as different tense forms and number forms), while the open search retrieves diverse forms of instances, including exact matches of the input, that contain some or all of the input words (including their formal variants and synonyms) in different orders [see “pattern searches” in (Pérez-Paredes et al. 2012, p. 10f)]. The wildcard search puts a wildcard inside a phrase enclosed by quotation marks, and retrieves instances that contain different texts as wildcard matches.

When the input is a single word, however, the type classification is tricky. A single-word search with quotation marks retrieves only exact matches and no variants, while without them it retrieves exact matches plus formal variants, just like the closed search. Now, single-word searches are always performed without quotation marks. These single-word searches can be regarded as closed searches because their results are just as exact as those of closed searches, but also as open searches because they are less exact than single-word searches with quotation marks.
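The classification described above can be illustrated with a minimal Python sketch. It assumes that a search input is available as a raw string and that surrounding quotation marks are the only signal of a closed or wildcard search. The function name `classify_search_input` and the label strings are hypothetical and were not part of the survey.

```python
OPENING_QUOTES = '"“'
CLOSING_QUOTES = '"”'


def classify_search_input(raw: str) -> str:
    """Label a search input by search type (hypothetical helper, not from the survey)."""
    text = raw.strip()
    quoted = (
        len(text) >= 2
        and text[0] in OPENING_QUOTES
        and text[-1] in CLOSING_QUOTES
    )
    inner = text[1:-1].strip() if quoted else text
    words = inner.split()

    # A wildcard inside a quoted phrase makes it a wildcard search.
    if quoted and "*" in words:
        return "wildcard"
    # Single words are kept apart because they can be read as closed or open.
    if len(words) == 1:
        return "single-word (quoted)" if quoted else "single-word (unquoted)"
    # Multi-word inputs: quotation marks decide between closed and open.
    return "closed" if quoted else "open"


print(classify_search_input('"it depends on"'))  # closed
print(classify_search_input("economical war"))   # open
print(classify_search_input('"a * deal of"'))    # wildcard
```

Keeping single-word inputs in their own category mirrors the ambiguity noted above rather than forcing them into either search type.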

Eu (submitted) defines the encoding query as a query that arises in the process of EFL writing/speaking as a quest for an L2 expression, and provides a detailed discussion of their nature and types. In this paper the term query will mean encoding query. Queries differ from searches in that searches are functions that can be used for different purposes, and queries use a search to find an L2 expression in the manner specific to their type (Yoon 2014, pp. 54f, 79, 92f, 208f; 2016, p. 216). Query types are established by the search input used and the aim of the query. Table 2 shows query types and the search type(s) they use.

To explain the inputs, candidate means a candidate for the answer, which is the correct L2 expression sought by the query. Just like candidates, a keyword set is an approximation of the answer; unlike candidates, however, the approximation by a keyword set is incomplete (see example below). A node is a known and fixed part of the answer that is used to find the unknown part of the answer. On the other hand, query aim is used in Yoon’s (2014, 2016) query classification. Here a verification query seeks to verify existing candidates, and an elicitation query seeks to find candidates. An elicitation/verification query both seeks to verify candidates and find new candidates in a single search.

Hence, one makes a candidate query (V) when she has one or more candidates in mind and wants to verify them before choosing one of them as the answer. Candidate query (V) enters each candidate in a closed search, which provides the frequency and greatest number of instances for each candidate. For instance, Geiller (2014) reports a student verifying the candidates it depends on and it depends of by Googling each in quotation marks.

A candidate query (EV) both verifies an existing candidate and looks for new candidates in a single search. This is made possible by the open search, which retrieves instances of the search input and its diverse variants. For instance, Googling economical war without quotation marks retrieves economical war and economic war (Geiller 2014). The fact that the instances of economic war outnumber those of economical war discredits the latter as a valid candidate and presents the former as a better candidate. Thus, when one has some candidates in mind, she does a candidate query (V) when she is highly confident that one of them is the answer, and a candidate query (EV) when she has no such confidence.

To illustrate the keyword query, Geiller (2014, p. 35f) reports that a student entered the keyword set Iraq scandals detention in open search and found the sentence “Rumors of Iraq prison abuse scandals started to emerge”, which he took as a candidate for the sentence he intended to write. As for node queries, a closed search of the node it’s as easy as retrieves its posterior collocates such as pie, ABC, and falling off a log (Geiller 2014), and a wildcard search of the node a * deal of retrieves results such as a great deal of and a good deal of (Yoon 2014). Now a combination of the node and a collocate can be taken as a candidate and brought to a candidate query (V) if necessary.

Previous surveys

Overview

This section reviews recent surveys on the use of reference tools including Google, focusing on the parts that concern Google. They pursue some or all of the four questions listed in Introduction. Table 3 provides a summary. Column 2 specifies the number, vocation, and L1 of the respondents. Column 3 outlines the survey process including pre-survey training, which shows different types and degrees of control. Column 4 indicates the search types taught and/or supported in the survey. Column 5 summarizes the findings based on the four questions. The success rates (number of correct answers over number of queries made) ranged from 52 to 70%.

Surveys

In the FG survey, a search engine was used in only 6% of the queries made, much less than bilingual resources (55%), English dictionaries (21%), and English corpora (9%). FG lists three query types and the number of Google queries made in each type:

(1) Confirming a Hunch: 6

(2) Choosing the Best Alternative: 0

(3) Finding a Suitable Collocate: 2

(1) is a candidate query (V) with a single candidate, which she calls a “hunch” (Frankenberg-Garcia 2005, p. 342); (2) a candidate query (V) with multiple candidates; and (3) a node query. FG does not discuss specific problems brought to Google except reporting one pitfall in search engine use:

The problems that occurred when the students carried out look-ups using search engines were all caused by an uncritical acceptance of the results retrieved. For example, one student looked up climatic change in Google, found it, and accepted it regardless of provenance… the target term in the translation was… climate change (Frankenberg-Garcia 2005, p. 349; see also Wallwork 2011, pp. 261–263).

The student did a candidate query (V) with the single candidate climatic change, and her failure shows that one should be careful with this query; it is always safer to check alternatives. Had she done a candidate query (EV) and entered the phrase in open search, she would have found the correct expression climate change.

The Geiller survey was entirely dedicated to Google use. In an effort to ensure the standard quality of the data source, it required the respondents to use a Google Custom Search that restricted the search base to 28 trustworthy websites. It provides examples of all four query types while prominently featuring keyword queries. It found that a majority of the respondents successfully used Google to improve their writing (52%), felt comfortable with the use of Google search options (81%), viewed Google as a good way to correct their errors and improve their English (81%), and intended to use it in the future for linguistic purposes (56%; 31% for undecided). Specifically, they reported difficulties in formulating “queries” (search inputs) and identifying alternatives to search for, and in processing and applying the results to their writing. Three respondents felt “overwhelmed with the results and simply did not know what to make of them” (Ibid, p. 37). Three others “sometimes found it tedious” to use Google “while they had other, more effective tools at their disposal (grammar handbooks, dictionaries, etc.)”.

The Yoon Survey is a PhD thesis devoted to the investigation of the use of general reference tools. The respondents were encouraged to use a software tool called I-Conc, which conveniently brought together concordancers (COCA, Google, JustTheWord) and dictionaries (Naver bilingual dictionary, LDCE, thesaurus.com). I-Conc also provided access to Google Custom Search (customized for each respondent with the sites specifically relevant to their work) and Google Scholar. Google, including GCS and GS, ranked third in the frequency of use, after Naver bilingual resource (25.6%) and COCA (25.4%) (Yoon 2014, pp. 83–84).

As for query types, echoing the trend in FG survey, 92.5% of the Google queries in Yoon Survey were verification queries, a large majority of which were single-candidate. Accordingly, open search occupied a small proportion: only 5.4% of the Google queries (Yoon 2014, p. 56: see “sentence/phrases hunting”). Overall, open search figures only marginally in Yoon survey. He mentions it only briefly (Yoon 2014, pp. 42, 56), and the training content for Google only includes closed searches (Yoon 2014, p. 206).

Yoon (2014, p. 92f) discusses “query strategies”, which concern “formulating or refining problem definition/representation and/or query term”, and “evaluation/application strategies”, which concern choosing the answer based on the result and adapting it to one’s writing. According to him, the most common query strategy was to obtain a candidate through an elicitation query and then put that candidate through a candidate query (V). The most common example of this was “getting a hint from Bilingual [dictionary] for an L2 form to express the intended meaning and then performing an English query on COCA or Google for its acceptability or frequency”. Another common example was to get a suggestion from COCA and then go to Google for more instances and confirmation. For instance, Yumee found discussion was deep by entering in COCA “discussion [vb*] [j*]” and then took it to Google. Furthermore, when the initial search was not helpful, some respondents revised the search input, making it more general or more specific, or “replacing a constituent word or structure with an alternative”. On the other hand, the most common evaluation strategy was choosing the candidate with the highest frequency.

Yoon (2014, p. 98) also discusses pitfalls, the first of which is to choose the “most frequent item from the results without examining its possible meaning differences from less frequent alternatives or whether it was actually used in the context that they intended”. Another is the wrong choice of query type and overuse of single-candidate candidate queries (V), a problem also reported by FG above. For instance, Yumee Googled the phrase made its identity in closed search to check if make is a good collocate of identity, and accepted it based on its high frequency. However, Yoon notes that a node query in COCA or JustTheWord would have shown her better collocates such as establish, create, develop, and forge. The respondents evaluated Google by listing “quick and easy resource for verification” as a pro and “too much informal, non-standard data” as a con.

ER-survey: quantitative findings

Overview and background

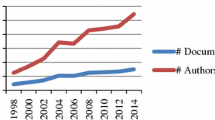

ER-survey was conducted over the first 5 months of 2015 targeting the non-native speakers of English who regularly write in English. It was distributed for unpaid participation through my human, online, and institutional networks, and received 73 completed responses. 30 respondents accepted follow-up discussions, which helped resolve discrepancies in their surveys and deepened my understanding of individual users’ reference behavior.

The declared purpose of the survey was “to understand how you currently check and correct your English” towards building software that optimizes Google for language reference. The survey has three sections. Section 1 “Background” requests relevant personal details including the type and amount of the English writing the respondent does. Section 2 “Your English-check process” first asks about “English problems” the respondent encounters while writing (types, frequency of occurrence, difficulty, etc.) and then about her current practice and experience in dealing with these problems, focusing on the use of Google. In Section 3 “Case report” the respondent can optionally provide details of an English problem she has dealt with. 28 case reports were received with varying degrees of completion and sincerity.

Tables 4, 5, and 6 show some relevant demographics of the respondents. The respondent type was determined based on the main types of English writing she did and her job. The casual writer’s main type of writing, for example, is personal communication such as emails and SNS postings. The respondents had a fair degree of mix, though with a clear dominance of academic writers and Korean and Chinese mother tongues.

As for the English level, most of the respondents were bilingual or advanced, and there were no elementary-level respondents. This seems to indicate that Google use is an activity appropriate to the advanced level, and that most Google users in language reference are advanced learners.

Reference process and reference tools

The survey found that on average, the respondents spend 21.4% of their English writing time on dealing with English problems, while getting a satisfactory answer in 72.5% of the problems they deal with (self-assessed). The survey asked about the frequency of use and helpfulness of reference tools in resolving English problems, and the respondents rated them as shown in Table 7, showing a strong preference for and trust in Google (In the survey, the order of presentation of the reference tools was random):

The top rating for Google in helpfulness shows that its frequent use is not simply due to its popularity but grounded on its performance. For “other tools”, respondents wrote the following: Google Translate (3), grammar books (2), academic text (for reference) (1), antonym dictionary (1), online dictionary (e.g. wordreference) (1), Linguee (1), “Googling the phrase” (1), and “Use word in a sentence” (1).

The prominence of Google is consistent with the findings of Conroy survey and other researchers (Conroy 2010, pp. 867, 874f, 878; Geiller 2014, p. 28f; Hafner and Candlin 2007; Sha 2010, p. 390; Sun 2007), while contradicting those of FG survey and Yoon survey. The latter surveys, however, had strong factors favoring other tools. In FG survey: (i) the task was a translation project, which necessitates a heavy use of bilingual resources; (ii) the respondents, as translation students, most likely had been taught to use dictionaries and corpora as standard (Sun 2007, pp. 337, 352), and the author acknowledges that their preference may have been “influenced by the three paper dictionaries recommended in their course bibliography” (Sun 2007, pp. 339; see also 345, 353); and (iii) the survey was done 12 years ago (the resources dated 18/11/2003) (Sun 2007, p. 339), when users were much less aware of search engines as a reference tool, and search engines had much less to offer. Indeed, in FG survey a serious underuse of Google is indicated by the limited query types.

In Yoon survey the prominence of the Naver bilingual resource is largely due to Naver being the most popular portal site in Korea. Yoon notes that Naver provides an especially useful Korean-English parallel corpus. About COCA, Yoon (2014, p. 156) says:

However, this frequent use of COCA by the respondents may not be an accurate indicator of their actual preferences. COCA was one of the new reference resources introduced to them, and they were encouraged to use as often as possible, so a novelty effect and also their awareness of being observed through screen recordings may have affected their choices of this resource.

In sum, the prominence of Naver bilingual resource and emphasis on the corpus and closed search during the training must have led to the respondents’ underuse of Google, especially the open search.

ER-survey sought to identify difficulties the respondents faced in Google use. Table 8 shows that 70% of the respondents experience difficulty formulating their search input, and Table 9 shows various factors that hinder a successful linguistic use of Google.

Types of Google use

The survey then asked a series of questions concerning the use of Google. Based on early responses and follow-up discussions the following types of Google use were finalized:

- DU-CS (direct use, closed search): E (exclusive), H (heavy), L (light)
- DU-OS (direct use, open search): E (exclusive), H (heavy), L (light)
- DU-WO (direct use, word search only)
- IU (indirect use: forums and blogs)
- NU (no Google use)/UNC (unclear)

In DU (direct use) users work out the answer to their English problem directly from the search result (frequencies and examples); in IU (indirect use) they visit forums and blogs that address problems similar to theirs, and Google only works as a channel to these sites. DU and IU are not complementary but may well be found in the same user. DU-CS is E, H, and L respectively when one does closed search exclusively (i.e., no open search), very often, and only sometimes; and the same for DU-OS. Since closed and open searches are complementary, DU-CS/E, DU-CS/H, DU-CS/L, and no DU-CS entail respectively no DU-OS, DU-OS/L, DU-OS/H, and DU-OS/E. Here, the wildcard search was ignored because only 4 respondents used the wildcard (ticked answer 12-6 below), one from DU-CS/E and three from DU-CS/H, and even for those who use it the wildcard search usually represents a marginal aspect of Google use.

Finally, DU-WO represents a small number of cases where the user only searches for single words and no phrases. Hence, DU-WO is complementary to both DU-CS and DU-OS, while DU-CS and DU-OS always include word search. The category of DU-WO was created because some respondents said they only searched for single words, and, as explained in section Search types and query types, single-word searches can be classified as closed or open.

Now, for each respondent use type(s) were selected according to her answer to the following questions:

12. How do you use the search engine? (select all that apply)

1. I don’t use it.
2. I check the number of results displayed at the top of the page.
3. I compare the numbers of results from different searches.
4. I look for examples that are same as or similar to my search phrase.
5. I read examples of my search phrase to understand its meaning.
6. I use the wild card (*) to get word/phrase suggestions.
7. I look for English forums or blogs addressing problems similar to mine.

13. When entering your search phrase, do you put quotation marks (“ ”) around it?

1. Yes, always.
2. Yes, very often, but not always.
3. Yes, but only sometimes.
4. Never.

Answers 12-2, 12-3 exclusively apply to DU-CS and DU-WO because it is only when all results (nearly) exactly match the search input that frequency is meaningful. On the other hand, answer 12-4 describes the typical process of DU-OS, where a user scans examples of diverse forms to find relevant ones, which are similar to or same as her search phrase. Answer 13-1 does not mean putting quotations on a single-word input.

All respondents who ticked 12-1 were assigned to NU, and all who ticked 12-7 to IU. Then, regardless of 12-7, if any of 12-2 to 12-6 was ticked, which indicates DU, one of the DU types was selected as follows:

1. 13-1 → DU-CS/E.
2. 13-2 → DU-CS/H and DU-OS/L.
3. 13-3 → DU-CS/L and DU-OS/H.
4. 13-4 → DU-OS/E (if 12-4 is ticked) or DU-WO (if 12-4 is not ticked).

In 4, ticking 13-4 indicates DU-OS/E or DU-WO. Then, due to the close association between DU-OS and 12-4, ticking 12-4 most likely indicates DU-OS/E, and not ticking it indicates DU-WO, an understanding I reached in a follow-up discussion.
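The assignment rules above can be written out as a short Python sketch. It assumes that a respondent's answer to question 12 is held as a set of ticked option numbers and the answer to question 13 as a single option number (or `None` if missing). The function name `assign_use_types` is hypothetical. Two behaviours are assumptions not stated in the survey: ticking 12-1 overrides any other tick, and a direct-use respondent with no usable answer to question 13 is marked UNC.

```python
from typing import Optional, Set


def assign_use_types(q12: Set[int], q13: Optional[int]) -> Set[str]:
    """Map answers to questions 12 and 13 onto use types (illustrative sketch)."""
    # 12-1 ("I don't use it") means no Google use.
    # Assumption: it overrides any other tick on question 12.
    if 1 in q12:
        return {"NU"}

    types: Set[str] = set()

    # 12-7 (forums and blogs) indicates indirect use, whatever else is ticked.
    if 7 in q12:
        types.add("IU")

    # Any of 12-2 to 12-6 indicates direct use; question 13 selects the DU type.
    if q12 & {2, 3, 4, 5, 6}:
        if q13 == 1:
            types.add("DU-CS/E")
        elif q13 == 2:
            types.update({"DU-CS/H", "DU-OS/L"})
        elif q13 == 3:
            types.update({"DU-CS/L", "DU-OS/H"})
        elif q13 == 4:
            # Never using quotation marks: 12-4 separates open search from word-only use.
            types.add("DU-OS/E" if 4 in q12 else "DU-WO")
        else:
            # Assumption: a missing answer to question 13 leaves the DU type unclear.
            types.add("UNC")

    # Assumption: nothing ticked at all is also treated as unclear.
    return types or {"UNC"}


print(sorted(assign_use_types({4, 7}, 4)))  # ['DU-OS/E', 'IU']
```

Returning a set reflects the point that DU and IU may be found in the same respondent.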

In fact, however, the survey did not start with the use types and questions in the form shown above; rather, further understanding gained through early survey responses necessitated their revision. Unfortunately, as a result, a large number of respondents missed the final version of questions 12 and 13, although many of them were kind enough to return and re-answer them. This resulted in a substantial number of respondents with UNC.

Tables 10 and 11 present the results:

Within DU-CS and DU-OS (those who search for multi-word phrases as well as single words in direct use), those who always or very often use quotation marks (DU-CS/E + DU-CS/H) can be called ‘closed search users’ and those who never or only sometimes use them (DU-OS/E + DU-OS/H) ‘open search users’. Table 12 shows that the latter outnumber the former by almost 4:1.

In fact, this prominence of open search is no surprise because it just shows that learners understand Google largely as an information source, where most of the searches are open, and they use Google linguistically largely in the same way they look for, say, travel information. Its prominence, however, may be a surprise to the current discourse on GALL, predominated by the understanding of “the Web as corpus” (Kilgarriff and Grefenstette 2003; Renouf and Kehoe 2013; Robb 2003; Boulton 2013; Shei 2008), because open search is unknown to corpora. Indeed, in the previous, academically controlled surveys open search appeared only in Yoon and Geiller surveys, and only marginally in Yoon survey.

ER-survey: case reports

Overview

Among the 28 case reports received 15 were discarded for the following reasons: 1 only used Google Translate, 5 were indirect uses, 3 made no or only marginal use of Google, 2 were unclear about the tools used, and 4 were simply bad, providing seriously incomplete and incoherent information. The remaining 13 cases are summarized and discussed here (Table 13). Their types are broken down as follows:

The ratio of open searches to closed searches falls far below 4:1 because of the high proportion of candidate queries (V). This proportion most likely arose because nearly all the examples of English problems that the survey provided to the respondents were candidate queries (V), a slip due to my lack of awareness of the open search at the beginning of the survey. On the other hand, the mismatch between the search types and query types in the number of respondents indicates an overuse of the open search, which is explained below. The success rate, 46%, was lower than those observed in the previous surveys.

Google reports two different frequencies in a search: a huge one on the first page and a much smaller one on the last page. The second frequency represents the actual number of examples retrieved by the engine, while the first frequency is extrapolated from the second through some complicated algorithm (Sullivan 2005; Veronis 2005). All frequencies used in this paper are actual frequencies.

Candidate queries (V)

Table 14 presents, for each case of candidate query (V), the candidates as embedded in the respondents’ sentence, search inputs she entered (separated by a line break or slash), answer she chose, search type, and success mark (see notes below the table). The search inputs may be the same as the candidate or adjusted for better examples and recall.

Respondent 380 provides a clear account of the query process. He cites examples (1) and says: “These examples showed me that check English problem merely finds out what the problem is, while deal with English problem tries to solve the problem”:

(1) a. The easiest way to check the problem on your end is to see if it’s either a problem with the battery itself or the charger.
    b. If you’re in debt and you are finding it hard to cope, it’s important to deal with the problem straight away.

Since one normally spends time on English problems not to find out what the problem is but to solve it, the choice of deal with as answer seems correct.

Respondent 35 also provides an excellent account of her choice of advance to:

If I type in ‘advance to’ on Google, it brings up an idioms dictionary and also some phrases like ‘failed to advance to the top three’ and ‘advance to the semi-finals’, which tells me that if I want to convey the idea of something reaching a higher value or level, this is the idiom I need to use. Compared to that, when I type in ‘advance into’, most results are war and military related articles (‘an army advances into another region’) which tells me maybe this isn’t the right choice of word.

But she used open searches to check out the candidates. Although she still obtained enough of their examples, two closed searches would have provided cleaner and richer results with frequencies favoring to. Furthermore, a node query with “to advance * a higher state” gives the following frequencies (counted by author):

(2) a. to advance to a higher state = 36
    b. to advance into a higher state = 3

These results would have made the decision process easier for her.
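A manual tally of this kind can be sketched in Python. The sketch assumes that the result snippets have already been collected as plain strings (no search API is called), that the pattern contains exactly one wildcard, and that the wildcard is filled by a single word, which is a simplification. The function name `count_wildcard_fillers` and the sample snippets are invented for illustration and are not data from the survey.

```python
import re
from collections import Counter
from typing import Iterable


def count_wildcard_fillers(pattern: str, snippets: Iterable[str]) -> Counter:
    """Count the words that fill the single '*' in a node-query pattern."""
    # Drop surrounding quotation marks, then split the node around the wildcard.
    left, right = (
        re.escape(part.strip()) for part in pattern.strip('"“”').split("*", 1)
    )
    # Simplification: the wildcard is assumed to stand for exactly one word.
    regex = re.compile(rf"\b{left}\s+(\w+)\s+{right}\b", re.IGNORECASE)

    counts: Counter = Counter()
    for snippet in snippets:
        for match in regex.finditer(snippet):
            counts[match.group(1).lower()] += 1
    return counts


# Invented snippets, for illustration only.
sample = [
    "They hope to advance to a higher state of being.",
    "He wants to advance into a higher state.",
    "Souls are said to advance to a higher state.",
]
print(count_wildcard_fillers('"to advance * a higher state"', sample))
```

The resulting counts can then be compared in the same way as the frequencies in (2).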

Respondent 307 did not explain how the search result led him to the correct answer although he marked it as “very helpful”. His search inputs were too short to be helpful; they should have included the matrix verb changed as the basis of comparison, as in (3):

(3) a. “has changed while I was” = 191 results.
    b. “has changed while I had been” = 1 result.

Respondent 352 rated Google as “unhelpful” although he used it first, while rating an online grammar checker and grammar book as “helpful”. But the reason Google did not help was that his search inputs were too short and picked up too much noise; longer inputs would have given him a clear answer with a large frequency gap:

(4) a. “Before analyzing the data” = 293 results.
    b. “Before analyzed the data” = 15 results.

He did use the longer phrases, but only with the grammar checker, which suggested (4a). Then the grammar book helped him understand the reason. I advised him about using longer phrases from the beginning with Google, whereupon he replied: “Sure, this would decrease my time needed to answer my questions. I’d raise my ratings for such a search engine”.

The problem of short search input continues in case 322. The respondent says that he picked the answer by checking the frequencies of the inputs and “very quickly” scanning their examples—the phrases with take outscored those with bring in extrapolated frequency. Unfortunately, however, the quick scan did not help him see the difference in meaning between the two candidates; the search inputs were too short to bring out the difference clearly, especially with an inundation of noise such as “take guests hostage”. Based on his comment that the target sentence was an “email response to an invitation to a home party” I coined the inputs “take guests to the party” and “bring guests to the party” and obtained examples like the following:

(5) a1. A fleet of privately hired London buses take guests to the Party.
    a2. Yellow Cabs will be available to take guests to the party.
    b1. Francis made only one request of his employees; they were not allowed to bring guests to the party.
    b2. You can bring guests to the party for an additional $15 per guest.

These examples show that take guests is used when it is only the guests who go to the party, and bring guests when the guests go to the party together with the subject. Since the respondent was going to the party, he should have chosen bring guests.

Respondents 447 and 49 used open searches for a candidate query (V) and failed to get helpful information. With 447 the search results had almost no example of on the job market or in the job market. She then went to the bilingual corpus site Linguee for further examples, and chose on the job market, declaring partial certainty. However, a closed search of longer phrases generates the following result:

(6) a. “many opportunities in the job market” = 40 results.
    b. “many opportunities on the job market” = 5 results.

Reading some of these phrases will show that, unlike the pair in (5), (a) and (b) do not differ in meaning; thus, she can choose (a), the one with a higher frequency. I explained this to her, and she replied as follows:

Thanks for the tip with longer sentences in quotation marks. I actually didn’t think of that. Based on those results I would take number one, sounds like a really good method to use in the future.

On the other hand, case 49 had too long a search input as well as the wrong search type. The respondent explained in the follow-up discussion that he had entered the entire target sentence in open search and then its various simplified versions. This brought no useful examples, and he could not choose the answer. Then I suggested using the phrases (7) in closed search:

(7) | a. | “I asked him if he still thinks” = 19. |

b. | “I asked him if he still thought” = 59. |

The two phrases express the same meaning, and so the one with the higher frequency can be chosen as the answer. When I explained this to him, he said he had not known about this method.
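The frequency comparison applied to (6) and (7) can be sketched as a small routine. This is only an illustration, not the procedure of any tool discussed in this paper: the function name `pick_by_frequency` and the `min_ratio` threshold are assumptions introduced for the example, and the counts are simply those reported in (6) and (7). The sketch presupposes that the candidates have already been judged equivalent in meaning; where they differ, as in (5), frequency alone cannot decide.

```python
def pick_by_frequency(counts, min_ratio=2.0):
    """Return the most frequent candidate, or None if there is no clear lead.

    counts: mapping from candidate phrase to its closed-search result count
            (at least two candidates are expected).
    min_ratio: hypothetical threshold; the winner must be at least this many
               times more frequent than the runner-up.
    """
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    (best, best_n), (_, second_n) = ranked[0], ranked[1]
    if best_n == 0:
        return None  # no evidence for any candidate
    if second_n > 0 and best_n / second_n < min_ratio:
        return None  # lead too small to trust
    return best


# Counts as reported in examples (6) and (7).
example_6 = {
    "many opportunities in the job market": 40,
    "many opportunities on the job market": 5,
}
example_7 = {
    "I asked him if he still thinks": 19,
    "I asked him if he still thought": 59,
}

choice_6 = pick_by_frequency(example_6)
choice_7 = pick_by_frequency(example_7)
```

Returning `None` when the lead is small is a design choice meant to push the user back to reading the examples rather than trusting a near-tie in counts.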

Case 506 is a single-candidate candidate query (V), for the querier tried to get information about one candidate with closed searches. But when I tried his searches, I got either zero results or no useful examples. He did not explain how he obtained the answer except saying “Changing the way of expression” for “Other tools” used, which suggests that he simply used his own judgment. Considering his uncertainty about the candidate and the negative search result, he could have tried the candidate query (EV). Entering the simplified phrase tell me as it is confirmed without quotation marks returns the following results:

(8) | a. | If you were to tell me as soon as I made this thread… |

b. | And you’ll tell me as soon as it’s confirmed?’ |

This shows the correct candidate and answer: as soon as it is confirmed. A problem, however, is that these examples show up after hundreds of results, making it nearly impossible to find them through manual scanning. Cases like this require software support that extracts only the helpful examples like the above (see Eu, submitted).
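One way such extraction could work is sketched below. It is not the method of Eu (submitted), whose details are not given here; it is a hypothetical filter that ranks result snippets by how many words of the search input they preserve in the same order (a longest-common-subsequence score). The names `tokenize`, `lcs_length` and `rank_snippets` and the `min_score` cut-off are assumptions made for the illustration. The first two snippets are the examples in (8); the third is an invented noise line.

```python
import re


def tokenize(text):
    """Lowercase word tokens; contractions such as "it’s" stay in one piece."""
    return re.findall(r"[a-z]+(?:['’][a-z]+)?", text.lower())


def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rank_snippets(query, snippets, min_score=3):
    """Keep snippets sharing at least min_score in-order words with the query,
    best match first. min_score is a hypothetical cut-off."""
    q = tokenize(query)
    scored = [(lcs_length(q, tokenize(s)), s) for s in snippets]
    kept = [(score, s) for score, s in scored if score >= min_score]
    kept.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in kept]


snippets = [
    "If you were to tell me as soon as I made this thread…",
    "And you’ll tell me as soon as it’s confirmed?",
    "Breaking news about the weather today.",  # invented noise for illustration
]
helpful = rank_snippets("tell me as it is confirmed", snippets)
```

With this input, the snippet containing both the frame tell me as and the word confirmed ranks first, which is the example that reveals the answer as soon as it is confirmed.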

Candidate queries (EV)

Table 15 shows the candidates, each of which is a phrase, a phrase in a sentence, or a whole sentence.

Respondent 191 had a successful query. She says she made the query because the phrase having nearly 100% urbanization “does not sound natural”. An open search of this phrase “shows that the form of ‘urbanised’ is used often”. Indeed, the search result includes (9):

(9) | a. | How would life evolve on an over urbanized earth? |

b. | Nearly 60 percent of urbanized San Jose has been paved over. |

Her judgment makes sense because, although the result includes many examples of urbanization, the noun is not used to describe a place in the way urbanized is. She then confirmed the use of urbanized in a candidate query (V) with a dictionary. Before choosing the phrase as the answer, however, she could have done another candidate query (V). For example, a wildcard search of “being nearly * urbanized” returns (10).

(10) | Singapore, being nearly 100% urbanized, is very susceptible to the Urban Heat Island effect. |

This is a candidate query (V) rather than a node query because here the wildcard only replaces a number, making the same candidate more general rather than getting alternative candidates. Case 354 is another successful one, where an open search of double-pointed sword returned an overwhelming number of instances of double-edged sword, which established the latter as a clear answer.
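The behaviour of a wildcard input such as “being nearly * urbanized” can be illustrated offline with a regular expression. The sketch below is an approximation under stated assumptions: it treats `*` as exactly one whitespace-delimited token, whereas Google's own wildcard matching is not specified here and may differ. The function names `wildcard_to_regex` and `find_matches` are introduced for the example only; the test sentences are examples (10) and (11).

```python
import re


def wildcard_to_regex(phrase):
    """Compile a quoted-phrase input with '*' wildcards into a regex.

    Assumption: each '*' stands for exactly one whitespace-delimited token.
    """
    parts = [r"\S+" if word == "*" else re.escape(word) for word in phrase.split()]
    # Lookarounds keep the match from starting or ending inside a longer word.
    return re.compile(r"(?<!\w)" + r"\s+".join(parts) + r"(?!\w)", re.IGNORECASE)


def find_matches(phrase, text):
    """Return every stretch of text that matches the wildcard phrase."""
    return wildcard_to_regex(phrase).findall(text)


sentence_10 = ("Singapore, being nearly 100% urbanized, is very susceptible "
               "to the Urban Heat Island effect.")
sentence_11 = ("The authors develop this argument from a critical discussion of "
               "contributions by Karl Polanyi and Robert Heilbroner.")

match_10 = find_matches("being nearly * urbanized", sentence_10)
match_11 = find_matches("develop * argument from", sentence_11)
```

In both cases the wildcard slot is filled by a single token (100% and this), so the candidate itself is confirmed rather than replaced by an alternative.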

By contrast, the rest of the cases were unsuccessful. Respondent 325 says Google search did not provide an answer. But entering he is very pushing generates the Google autocomplete suggestion he is very pushy, which is the answer, and it is not clear why he missed it. Case 265 is another where the reason for the failure is unclear. An open search of develop the argument retrieves a result filled with this phrase and with no alternatives, which confirms it as a strong candidate. Then a candidate query (V) with the phrase “develop * argument from” retrieves (11), whose close similarity to his target sentence confirms the candidate as the answer:

(11) | The authors develop this argument from a critical discussion of contributions by Karl Polanyi and Robert Heilbroner. |

Respondent 17, uncomfortable with his candidate sentence, first searched for the whole sentence and then a simplified one by dropping “new bank”. Seeing several occurrences of the phrase following information in the result as in (12), he formulated his answer by restructuring the candidate with this phrase.

(12) | Use the following information about a customer’s margin account to answer the question. |

However, his answer seems a bit awkward. Further down the result there are examples that show better candidates and answers, but again they are hundreds of results away. Hence, this is another case that urgently calls for software support (see Eu, submitted).

User assistance

The findings of the ER-survey, as well as the authors of the previous surveys, speak to a strong need for user training and support in GALL (Conroy, 2010, p. 879; Geiller, 2014, p. 39; Yoon, 2014, p. 174; Zengin & Kaçar, 2015, p. 332). In particular, in the ER-survey only 7% of the respondents said they “always get a satisfactory answer from it [Google]” (8/Table 9); this indicates that user difficulties are serious and that there is much need for user assistance. User assistance can be provided in two ways: i) a software interface to optimize Google for language reference and ii) user guidance and training.

The optimizer should build a search structure that provides easy and effective access to Google’s functions based on the user’s query situation; in other words, users should be able to easily select the right search module based on their current knowledge about their target expression and query aim and obtain the best possible results. Some details of the optimizer function are discussed below. In particular, a clear search structure will help avert the problem of wrong query selection, such as case 506 and those reported in FG survey and Yoon survey, and the problem of wrong search selection, such as cases 35, 49, and 447.

Although the strong predominance of the open search has much to do with its prominent role in information search (see subsection Types of Google use), it has a more important basis: the open search is powerful. It often delivers very helpful results without requiring much knowledge from users about the answer, as shown in subsections Candidate queries (V) and Candidate queries (EV) and further in Geiller (2014, p. 35f) and Eu (submitted). This seems to call for a new approach to GALL: the Web is not just a large corpus but a new and unique reference tool. There should be more research on the nature and current practice of candidate query (EV) and keyword queries, to develop query strategies and identify pitfalls for user guidance. Furthermore, as discussed, the optimizer should go beyond the current limitation of the open search, where helpful examples are often buried deep in cluttering data, by extracting helpful examples from open search results for a convenient result view.

Cases 35, 49, and 447, however, where an open search was used for a candidate query (V), suggest that a significant portion of the open searches represent overuse, or a mismatch between query type and search type. The follow-up discussions revealed that many users overuse open search not because they don’t know about quotation marks but because they are so used to searching without quotation marks in information search that using them just does not occur to them even when they are likely to bring better results (“I actually didn’t think of that” – respondent 447). Another important reason for the overuse is the inconvenience of the closed search: it is extra work to type in quotation marks, and with multiple candidates one has to Google them one by one and then compare the results across the separate result pages. Indeed, some respondents said they are often too “lazy” to do a closed search. Thus, especially for multiple inputs, the optimizer must simplify the process of input entry and display all results in a single view (Kazuaki et al., 2010; Milton and Cheng 2010).
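The input-entry side of this requirement can be sketched as follows. The sketch is a minimal illustration, not a description of any existing optimizer: the function name `closed_search_urls` is hypothetical, and the base address and the `q` parameter are assumed to be the usual ones for a Google web search. It takes several bare candidates, adds the quotation marks on the user's behalf, and returns one ready-made closed-search address per candidate, so that a front end could open or fetch them side by side.

```python
from urllib.parse import urlencode


def closed_search_urls(candidates, base="https://www.google.com/search"):
    """Map each candidate phrase to the URL of its closed (quoted) search.

    base: assumed search endpoint; 'q' is assumed to carry the query string.
    """
    urls = {}
    for phrase in candidates:
        # Strip stray quotes the user may have typed, then quote the phrase once.
        quoted = '"' + phrase.strip().strip('"') + '"'
        urls[phrase] = base + "?" + urlencode({"q": quoted})
    return urls


# The two inputs coined for case 322.
urls = closed_search_urls(["take guests to the party", "bring guests to the party"])
```

Collecting the counts and examples behind these addresses into a single result view is the part that still requires the software support discussed above.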

User guidance should include a guide on choosing the correct query type and search type based on the user’s current knowledge and aim. Furthermore, Table 8, the case reports, and the previous surveys report user difficulties in formulating search inputs. In the case reports the respondents were generally good at simplifying their input, but many of them (307, 352, 322, 447) simplified it too far, making it too short to make the best use of the large data of Google (Zengin and Kaçar 2015). In terms of the findings of Table 9, the problems of low recall (1), noise (2) and failure to find helpful examples (3, 5, 6) have much to do with bad inputs. An ideal search input preserves the context of the target sentence that is relevant to the choice of the answer and blocks noise (Zengin and Kaçar 2015, p. 336: “immediate context”), while at the same time securing sufficient recall. I believe our discussion in section ER-survey: case reports showed examples of this ideal. Hwang and Kuo (2015, p. 406) discuss the importance of inputs (“keywords”) in general information search.

Another important area of user guidance is the use of search results in finding the answer (“evaluation/application strategies”). In particular, FG and Yoon report the problems of “uncritical choice based on frequency” (see also Chang 2014, p. 254). In ER-survey respondent 322 repeated the same error by choosing the answer simply by frequency. When the answer is “context-dependent” (Table 9), it cannot be chosen by candidate frequencies but only by examining the meaning and context of each candidate. Indeed, Table 9 shows that the problem of context dependency (4) is the greatest stumbling block to a successful query. Our discussion of cases such as take guests versus bring guests (Table 14) can be used to enhance users’ ability to deal with context dependency.

Conclusion

The surveys we have discussed, designed to understand patterns of Google use in language reference, provide important signposts for pedagogical response and user support. ER-survey found the matchless popularity of Google as an English reference tool, the predominance of the open search, and various difficulties users face, in areas such as query selection, search selection, search input formulation, and search result use. This has enabled us to sketch out ways of user assistance in terms of software optimization and user guidance. It is hoped that these efforts help establish a powerful tool and method that open a new horizon in language reference and learning.

References

Bhatia, T. K., & Ritchie, W. C. (2009). Second language acquisition: Research and application in the information age. The new handbook of second language acquisition (pp. 545–565). Bingley: Emerald.

Boulton, A. (2012). What data for data-driven learning? European Association for Computer-Assisted Language Learning (EUROCALL). Northern Ireland: University of Ulster.

Boulton, A. (2013). Wanted: Large corpus, simple software. No timewasters. Paper presented at the TaLC10: 10th International Conference on Teaching and Language Corpora.

Chang, J.-Y. (2014). The use of general and specialized corpora as reference sources for academic English writing: A case study. ReCALL, 26(2), 243–259.

Chinnery, G. M. (2008). You’ve got some GALL: Google-assisted language learning. Language Learning & Technology, 12(1), 3–11.

Conroy, M. A. (2010). Internet tools for language learning: University students taking control of their writing. Australasian Journal of Educational Technology, 26(6), 861–882.

Eu. (submitted). Optimizing Google as a reference tool for EFL writing.

Frankenberg-Garcia, A. (2005). A peek into what today’s language learners as researchers actually do. International Journal of Lexicography, 18(3), 335–355.

Geiller, L. (2014). How EFL students can use Google to correct their “untreatable” written errors. European Association for Computer Assisted Language Learning, 22(2), 26–45.

Hafner, C. A., & Candlin, C. N. (2007). Corpus tools as an affordance to learning in professional legal education. Journal of English for Academic Purposes, 6(4), 303–318.

Hwang, G.-J., & Kuo, F.-R. (2015). A structural equation model to analyse the antecedents to students’ web-based problem-solving performance. Australasian Journal of Educational Technology, 31(4), 400–420.

Kazuaki, A., Tsunashima, Y., & Furukawa, Y. (2010). An English and/or Japanese writing support tool based on a web search engine. International Journal of Computer Applications in Technology, 38(4), 324–332.

Kilgarriff, A., & Grefenstette, G. (2003). Introduction to the special issue on the web as corpus. Computational Linguistics, 29(3), 333–347.

Milton, J., & Cheng, V. S. (2010). A toolkit to assist L2 learners become independent writers. Proceedings of the NAACL HLT 2010 Workshop on Computational Linguistics and Writing: Writing Processes and Authoring Aids (pp. 33–41): Association for Computational Linguistics.

Panah, E., Yunus, M. M., & Embi, M. A. (2013). Google-informed pattern-hunting and pattern-defining: Implication for language pedagogy. Asian Social Science, 9(3), 229.

Pérez-Paredes, P., Sánchez-Tornel, M., Alcaraz Calero, J. M., & Jiménez, P. A. (2011). Tracking learners’ actual uses of corpora: guided vs non-guided corpus consultation. Computer Assisted Language Learning, 24(3), 233–253.

Pérez-Paredes, P., Sánchez-Tornel, M., & Calero, J. M. A. (2012). Learners’ search patterns during corpus-based focus-on-form activities: A study on hands-on concordancing. International Journal of Corpus Linguistics, 17(4), 482–515.

Renouf, A., & Kehoe, A. (2013). Filling the gaps: Using the WebCorp Linguist’s Search Engine to supplement existing text resources. International Journal of Corpus Linguistics, 18(2), 167–198.

Robb, T. (2003). Google as a quick ‘n dirty corpus tool. TESL-EJ, 7(2). http://www.tesl-ej.org/wordpress/issues/volume7/ej26/ej26int/?wscr=.

Sha, G. (2010). Using Google as a super corpus to drive written language learning: A comparison with the British National Corpus. Computer Assisted Language Learning, 23(5), 377–393.

Shei, C.-C. (2008). Discovering the hidden treasure on the Internet: Using Google to uncover the veil of phraseology. Computer Assisted Language Learning, 21(1), 67–85.

Sullivan, D. (2005). Search engine sizes. Search engine watch. http://searchenginewatch.com/reports/article.php/2156481.

Sun, Y.-C. (2007). Learner perceptions of a concordancing tool for academic writing. Computer Assisted Language Learning, 20(4), 323–343.

Veronis, J. (2005). Web: Google’s missing pages: Mystery solved? Technologies du Langage. http://aixtal.blogspot.com/2005/02/web-googles-missing-pages-mystery.html.

Wallwork, A. (2011). Using Google to reduce mistakes in your English. English for Academic Correspondence and Socializing. New York: Springer.

Wu, S., Franken, M., & Witten, I. H. (2009). Refining the use of the web (and web search) as a language teaching and learning resource. Computer Assisted Language Learning, 22(3), 249–268.

Yoon, C. (2014). Web-based concordancing and other reference resources as a problem solving tool for L2 writers: A mixed methods study of Korean ESL graduate students’ reference resource consultation. University of Toronto, Toronto.

Zengin, B., & Kaçar, I. G. (2015). Google Search Applications in Foreign Language Classes at Tertiary Level: A Case Study in the Turkish Context. Intelligent Design of Interactive Multimedia Listening Software (pp. 313–356): IGI Global.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Eu, J. Patterns of Google use in language reference and learning: a user survey. J. Comput. Educ. 4, 419–439 (2017). https://doi.org/10.1007/s40692-017-0094-5

DOI: https://doi.org/10.1007/s40692-017-0094-5