Flickr’s auto-tagging feature goes awry, accidentally tags black people as apes

The site’s tool was built to help people easily identify features of pictures — but has run into problems as it learns

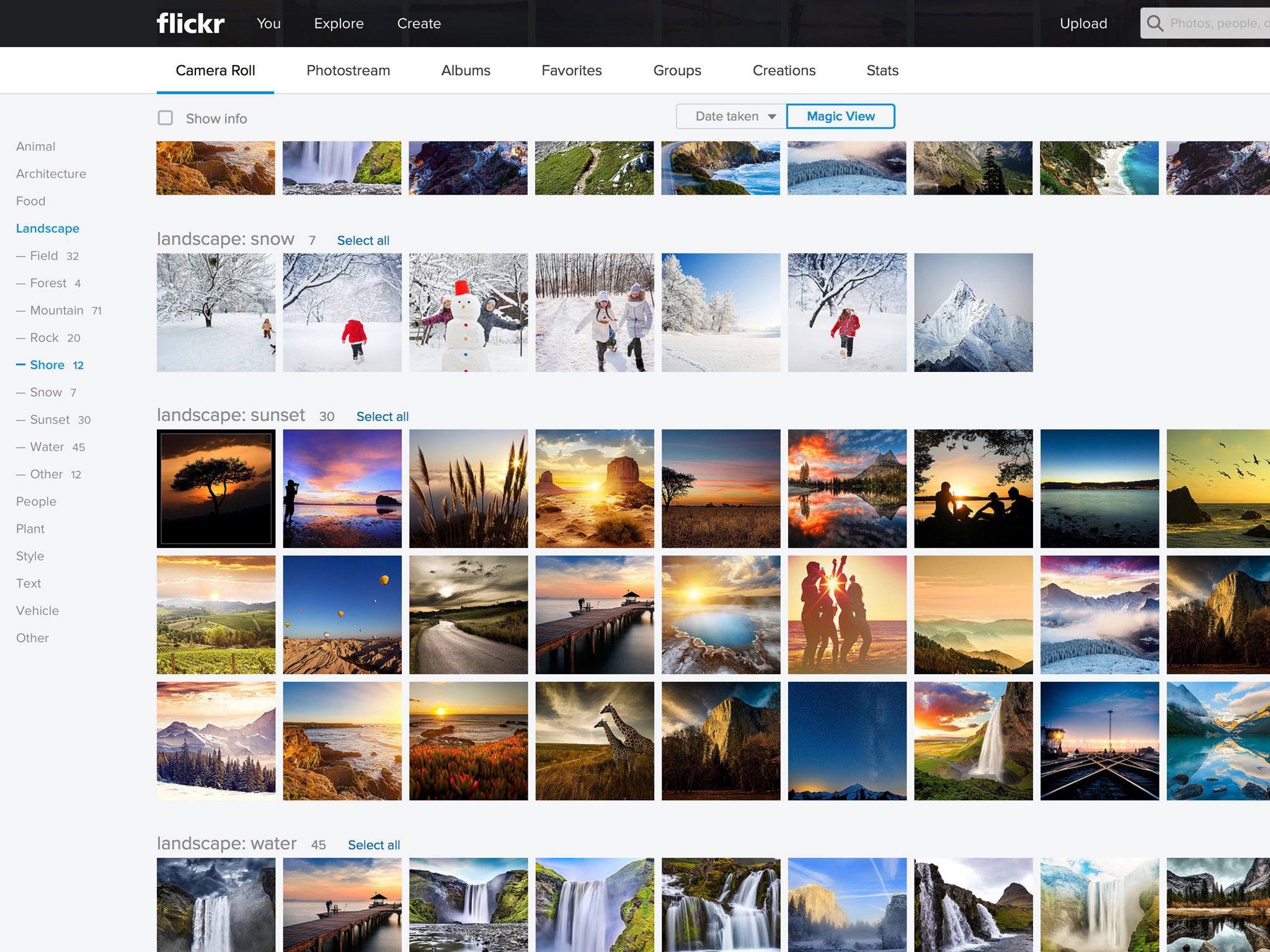

Flickr’s auto-recognition tool for sorting photos appears to be identifying people as animals and the gates of Dachau as a jungle gym.

The tool, which Flickr admits is still getting things wrong and is in the process of teaching itself, was built to automatically add tags to photos so that users could sort through them more easily. Launched just days ago, it is already applying offensive tags to some photos.

It appeared to have tagged one picture of a black man with "ape" and "animal". Though the racist implications were obvious, it has also applied the same tags to a picture of a white woman.

It also mislabelled other sensitive images, such as a photo of a gate at Dachau, which it tagged as a "jungle gym".

Clicking on any of the tags shows that most of the auto-tags are correct. But those offensive ones generated anger on Twitter, with comments on the "ape" picture saying "OMG! APE?" and "this auto tagging is really bonkers... shame on flickr".

Flickr told the Guardian that it was "aware of issues with inaccurate auto-tags on Flickr and are working on a fix".

"While we are very proud of this advanced image-recognition technology, we’re the first to admit there will be mistakes and we are constantly working to improve the experience," a spokesperson said. "If you delete an incorrect tag, our algorithm learns from that mistake and will perform better in the future. The tagging process is completely automated – no human will ever view your photos to tag them."

Tags generated by Flickr's robots are also marked out by a different colour. Tags added by people have a grey background, while automatically generated tags have a white one.

While the feature occasionally identifies things wrongly, it usually does so in an understandable way, seeing a motorbike as a bicycle, for instance. But the same mechanisms that are in place to teach Flickr to correct less offensive mistakes should also step in to remove the problematic ones, as more people tell the site that those tags are wrong.
