Skype’s Gone Multilingual

Katrina Rippel is a careful speaker who follows all the rules. Hao Chen is a more freewheeling conversationalist. And I’m a nonstop troublemaker, constantly blurting out whatever notions pass through my head. On a recent morning, the three of us met in cyberspace to find out how well (or poorly) we could communicate in a mixture of German, Mandarin, and English. Each of us spoke only our native language.

Donning headphones, we tapped into Skype Translator, a creation of Microsoft’s research team. (My chatting partners were part-time consultants to Microsoft, who happened to be thousands of miles away from my West Coast U.S. base.) When I asked Chen where he grew up, it didn’t faze me to hear him say: 我在中国的家乡在东北,辽宁省,鞍山市. A few seconds later, a friendly synthetic voice told me: “My hometown is in the northeast in China, Liaoning Province, Anshan.”

If only the rest of our exchanges had worked so smoothly. When Chen tried to explain his U.S. travels, Skype mishandled an ambiguous Mandarin noun, telling me he had journeyed to “the cadre of New York.” Only when Chen tried different phrasing was Skype willing to make it “the state of New York.” When I asked Rippel about her German hometown, Skype’s software, expecting me to speak English, not German, heard me say “dressed” instead of “Dresden.” So it created a gibberish sentence, in which the German word bekleidet appeared instead of her city name.

As such stumbles show, machine-based translation of everyday speech isn’t there yet, despite 30 years of trying. It’s our own fault, really. If we spoke with the clarity and precision of United Nations diplomats, then artificial-intelligence tools could decode everything according to well-established patterns. The more we rely on uncharted words or syntax to get our thoughts across, the harder it is for translation software to get everything right without extra help.

Even so, Microsoft, Google, Baidu, Facebook, IBM, and many others are vying for supremacy in this difficult field. Offering top-grade voice recognition and translation can become an attractive calling card that helps lock in customers for many other services. These range from Internet search to cloud computing, in which data storage and processing are provided via remote servers and an Internet connection.

Worldwide cloud and infrastructure spending topped $115 billion last year and is growing at a 28 percent annual rate, according to Synergy Research. Real-time translation can help competitors’ overall suites of cloud services stand out in what otherwise becomes a price-driven commodity business. For now, most translation services are available free of charge, but paid alternatives may emerge as global enterprises seek customized translation tools that work even better.

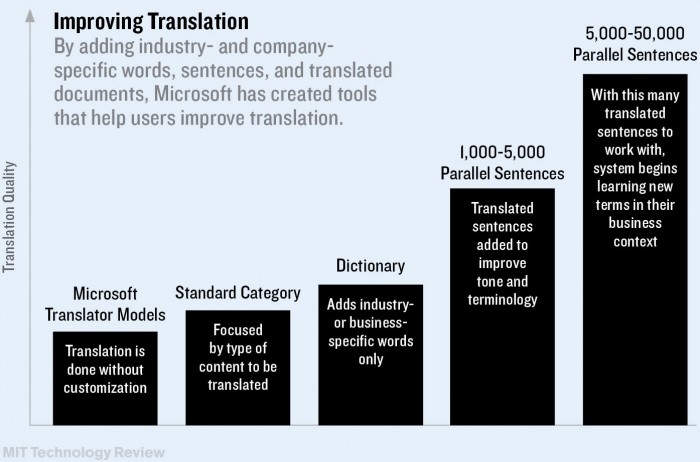

Microsoft, in particular, is exploring ways that corporate users can build greater capabilities atop the basic Skype Translator engine that Rippel, Chen, and I tested. One area of interest: helping customers load in thousands of specialized terms, reference documents, sample conversations, and quirky locutions ahead of time. That way, “Dresden”-type problems should be much less likely to occur.
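To make the idea concrete, here is a minimal sketch of how a preloaded glossary could tip the balance between two competing transcriptions. It is an illustration only, not Microsoft's method: the function `rerank_with_glossary`, the candidate scores, and the `boost` value are all hypothetical, and a real recognizer would weigh far more evidence than this.

```python
def rerank_with_glossary(hypotheses, glossary, boost=0.5):
    """Pick the best transcription after rewarding glossary terms.

    hypotheses: list of (text, score) pairs from a hypothetical recognizer.
    glossary:   set of lowercase terms a customer loaded ahead of time.
    boost:      illustrative bonus added once per glossary term found.
    """
    def adjusted(item):
        text, score = item
        # Normalize each word so "Dresden?" still matches "dresden".
        words = [w.strip(".,?!").lower() for w in text.split()]
        hits = sum(1 for w in words if w in glossary)
        return score + boost * hits

    return max(hypotheses, key=adjusted)[0]


# Made-up scores: the recognizer slightly prefers the common English word.
hypotheses = [
    ("tell me about dressed", 0.60),
    ("tell me about Dresden", 0.40),
]
glossary = {"dresden", "anshan", "liaoning"}

best_without = rerank_with_glossary(hypotheses, set())
best_with = rerank_with_glossary(hypotheses, glossary)
```

With an empty glossary the higher-scoring "dressed" wins; once "dresden" is in the glossary, the boost is enough to reverse the ranking.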

Translation software works much better if it can tap into a hefty database of language patterns that particular speakers are likely to use, explains Microsoft research strategy director Vikram Dendi. Management consultants may use terms like “delta” and “granularity” in contexts that seem unthinkable to the rest of us. Industrial chemists may banter about more than a dozen different kinds of phthalates. And inside any big company, nicknames for projects, processes, and top executives are endless.


Since 2011, Microsoft has allowed big customers to load their own glossaries or written materials into specialized text-translation databases. That’s meant to produce more reliable results than Microsoft’s basic Bing service provides, especially on dense technical material. More than 100,000 users have opted for customization, Dendi says. Light users may pay as little as $40 a month; heavy users such as Adobe and Twitter may pay far more.

Microsoft has tried a wide range of strategies to crack translation since the mid-1990s, when company founder Bill Gates predicted that speech recognition would be widely available within 10 years. Early approaches relied heavily on attempts to catalogue specific rules of grammar and usage. Starting in 2009, Microsoft broadened its emphasis. Statistical techniques have been paired with neural networks, machine-learning systems that are loosely modeled on the structure of the human brain and that teach themselves from examples.

Currently, Microsoft uses five layers of neural nets to analyze speech, according to Peter Lee, head of the company’s research division. The lowest layers analyze sounds on a level that’s as rudimentary as the way image-analyzing software looks for edges and surfaces, without making any attempt to figure out what the objects might be. As with many advanced machine-intelligence approaches, there’s some mystery to how it works, even to the researchers involved. “It has nothing to do with words or phonemes,” Lee says, referring to the sounds that distinguish one word from another. “I don’t think any of us understand exactly what the bottom layer is looking at. But it works surprisingly well.”

Microsoft’s researchers have also been making greater use of what’s known as “long short-term memory.” When recognizing speech or translating, neural nets make a series of guesses that keep getting revised as new information comes in. Occasionally, an expected pattern suddenly falters. In such cases, neural nets can do a better job of regrouping if they can revisit the assumptions that led to several words’ worth of guesses. Keeping a longer trail in the system’s short-term memory makes such retracing and subsequent corrections possible.
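For readers curious about the mechanics, the sketch below implements one step of a textbook long short-term memory cell, reduced to single numbers instead of vectors. It is a generic illustration of the technique, not Skype Translator's code: the parameter names and values are assumptions, hand-picked rather than learned, so that the effect of the "forget gate" is easy to see.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a scalar LSTM cell (standard textbook equations)."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add some new
    h = o * math.tanh(c)     # what the cell exposes to the next layer
    return h, c


def run(inputs, p):
    """Feed a sequence through the cell and record the memory after each step."""
    h, c = 0.0, 0.0
    memory = []
    for x in inputs:
        h, c = lstm_step(x, h, c, p)
        memory.append(c)
    return memory


# Hypothetical hand-set parameters (a trained network would learn these).
params = {
    "wf": 0.0, "uf": 0.0, "bf": 5.0,    # forget gate stays near 1: retain memory
    "wi": 10.0, "ui": 0.0, "bi": -5.0,  # input gate opens only when x is large
    "wo": 0.0, "uo": 0.0, "bo": 5.0,
    "wg": 2.0, "ug": 0.0, "bg": 0.0,
}

inputs = [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]  # one signal, then silence

remembering = run(inputs, params)
forgetful = run(inputs, dict(params, bf=-5.0))  # forget gate near 0
```

In `remembering`, the value stored at the first step is still largely intact five steps later; in `forgetful`, it has all but vanished after a single step. That retained trail is what lets a network go back over the evidence behind several words' worth of guesses.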

It takes at least 4,000 hours of spoken samples—and millions of words of text—to train Skype Translator’s neural nets in each new language. Arul Menezes, head of Microsoft’s machine-translation team, says he had expected difficulties in languages such as Arabic, where speakers’ accents can vary widely. But by collecting samples of enough different speakers’ voices, Menezes says, it’s been possible to develop Skype Translator’s “ear” for different intonations to the point that regional accents aren’t a problem. The same applies to the differences between male and female voices.

Other variations in everyday speech turn out to be trickier. The neural nets are exquisitely sensitive to differences in microphones. (Humans may be good at detecting the difference between static and speech, but that’s much harder for machines to master.) Pauses in speech are problematic, too. As Menezes notes, “People generally don’t pause at the end of a sentence. They pause elsewhere. Pauses end up being useless in detecting when a sentence begins or ends. You have to go by the words themselves.”

Sorting out the right translations for ambiguous words is a never-ending challenge, too, Menezes acknowledges. While speaking German, Rippel frequently uses the word Sie, which can mean she, you, or they, depending on the situation. Skype Translator gets it right about 80 percent of the time.

Similarly, Skype Translator stumbles slightly when Chen discusses family sizes in China. Regardless of government policies, Chen tells me, the sheer cost of parenting in China means that “a lot of people only want to give birth to a child.”

A few minutes later, sitting in Building 99 of Microsoft’s headquarters, Menezes and I review a transcript of the conversation. Menezes ruefully points to the child-raising exchange. “That should be ‘one child,’” he says. “But in Chinese, there’s no distinction between ‘one’ and ‘a.’ There’s a difference in English, but it has to be picked up entirely in context.”

“I don’t think professional translators are quaking in their boots yet at what we’re doing,” he adds, with a thin smile. “Their jobs are safe for quite a while.”

Rippel, a professional translator, isn’t nearly so critical. As long as users speak slowly and keep sentences short, she says, automated services such as Skype Translator can do a useful job of overcoming language barriers.

“It’s very important that this tool exists,” she says. “In current times, it’s more important than ever for people in all communities to be able to talk to one another.”
