What Your Facebook Posts Say About Your Mental Health

Psychologists are discovering just how much information about our inner states can be gleaned from social media.

For some people, posting to social media is as automatic as breathing. At lunchtime, you might pop off about the latest salad offering at your local lettucery. Or, late that night, you might tweet, “I can’t sleep, so I think I’m just going to have a glass of wine” without a second thought.

Over time, all these Facebook posts, Instagram captions, and tweets have become a treasure trove of human thought and feeling. People might rarely look back on their dashed-off online thoughts, but if their posts are publicly accessible, they’re ripe for analysis. And some psychologists are using algorithms to figure out what exactly it is we mean by these supposedly off-the-cuff pronouncements.

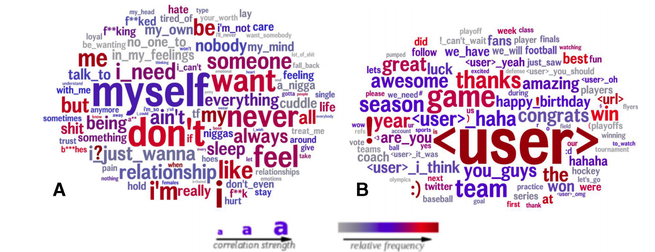

According to new research, for instance, a tweet like “I’m up at 2 a.m. drinking wine by myself” says one thing pretty clearly: “I’m lonely.” For a study in BMJ Open, researchers at the University of Pennsylvania analyzed 400 million tweets posted by people in Pennsylvania from 2012 to 2016. The authors scraped together the Twitter posts of users whose tweets contained at least five mentions of the words “lonely” or “alone,” and compared them with a control group with similar demographics. (The authors did not explicitly ask those who often tweeted about loneliness whether they actually were lonely.)
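
To make that selection rule concrete, here is a minimal sketch in Python. It is not the researchers' code: the function name, the sample tweets, and the choice to count every occurrence of a word (rather than every tweet containing one) are assumptions made for illustration.

```python
import re
from collections import Counter

# Threshold taken from the article's description ("at least five mentions").
MIN_MENTIONS = 5

# Match "lonely" or "alone" as whole words, ignoring case.
LONELY_PATTERN = re.compile(r"\b(?:lonely|alone)\b", re.IGNORECASE)


def select_lonely_users(tweets):
    """Return the users whose tweets mention "lonely" or "alone" often enough.

    `tweets` is an iterable of (user, text) pairs. Counting each occurrence
    of a word is an assumption; the study may have counted differently.
    """
    mentions = Counter()
    for user, text in tweets:
        mentions[user] += len(LONELY_PATTERN.findall(text))
    return {user for user, count in mentions.items() if count >= MIN_MENTIONS}


# Hypothetical sample data, invented for this sketch.
sample = [
    ("ana", "so lonely tonight"),
    ("ana", "alone again, alone as usual"),
    ("ana", "home alone and lonely"),
    ("ben", "awesome game tonight"),
    ("ben", "left alone at the bar"),
]

print(select_lonely_users(sample))
```

A filter this simple only picks out people who use the words. As the authors acknowledge, it cannot confirm that those people are actually lonely.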

The seemingly lonely people swore more, and talked more about their relationship problems and their needs and feelings. They were more likely to express anxiety or anger, and to refer to drugs and alcohol. They complained of difficulty sleeping and often posted at night. The non-lonely control group, perhaps fittingly, began a lot more conversations by mentioning another person’s username. They also posted more about sports games, teams, and things being “awesome.”

This study was far from a perfect window into Twitter users’ souls. Certainly, people can talk about their needs and feelings without being lonely. But natural-language processing is nevertheless making it easier for scientists to understand what different emotions look like online. In recent years, researchers have used social-media data to predict which users are depressed and which are especially happy. As the tools for analysis become more sophisticated, a wide array of emotions and mental-health conditions can now be predicted using the words that people are already typing into their phones and computers every day.

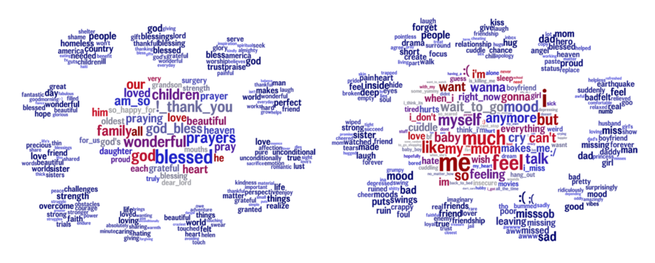

In some cases, researchers can unearth fine-grained differences within amorphous emotions. Take, for instance, empathy. There’s long been an idea in psychology that there are two types of empathy: “Beneficial” empathy, or compassion, involves sympathizing with someone and trying to help that person. Meanwhile, “depleting” empathy entails feeling someone’s actual pain—and suffering yourself in the process. For a paper that is still undergoing peer review, another group of researchers at the University of Pennsylvania analyzed social-media language to determine how these two types of empathy are expressed. They found that people who demonstrate compassion tend to say things like “blessed,” “wonderful,” “prayers,” or “family.” Those who express depleting empathy use words like “me,” “feel,” “myself,” and “anymore.”

This might seem like a minor distinction, but according to Lyle Ungar, one of the study’s authors, finding the difference between the two can help people in jobs that involve caring for others, such as doctors, understand when their empathy might be counter-productive. Depleting empathy can lead to burnout. “I can really care about you and not suffer with you,” Ungar says. “I can worry that there’s poverty in Africa and donate money to charity without feeling what it’s like to have malaria.”

Beyond common emotions, language-analysis technology might also shed light on more serious conditions. It might one day be used to predict psychosis in patients with bipolar disorder or schizophrenia. Episodes of psychosis, or losing touch with reality, can be shortened or even stopped if caught early enough, but many patients are too far gone by the time loved ones realize what’s happening. And it’s difficult for people going through psychosis to realize they’re in the midst of it.

Last month, researchers from the Feinstein Institutes for Medical Research and the Georgia Institute of Technology analyzed 52,815 Facebook posts from 51 patients who had recently experienced psychosis. They found that the language the patients used on Facebook was significantly different in the month preceding their psychotic relapse, compared with when they were healthy. As their symptoms grew worse, they were more likely to swear, or to use words related to anger or death, and they were less likely to use words associated with work, friends, or health. They also used more first-person pronouns, a possible sign of what’s called “self-referential thinking,” the study authors write, or the tendency for people who are experiencing delusions to falsely think that strangers are talking about them. (In the recent loneliness study, the lonely Twitter users were also more likely than the control group to use the words “myself” or “I.”)

Those experiencing psychosis more frequently “friended” and tagged others on Facebook in the month before their relapse. It’s not so much that making new friends on Facebook is problematic, says Michael Birnbaum, an assistant behavior-science professor at the Feinstein Institutes for Medical Research and lead author on the study. It’s that the increased activity reflects a shift in behavior in general—which could be a sign of an upcoming psychotic break. “It’s something that they wouldn’t typically do when they were in a period of relative health,” Birnbaum says.

The researchers behind such studies say that eventually, these types of analyses could help flag people who are lonely or suffering, even if they can’t or won’t visit a doctor. “Loneliness is sort of a pathway to depression,” says Sharath Chandra Guntuku, a research scientist at the Center for Digital Health at the University of Pennsylvania, and the lead author on the loneliness study, “so we wanted to see if we can look at loneliness instead of letting it progress all the way to depression.” Eventually, people feeling lonely or showing other signs of mental distress could be served a chat screen with a real person to talk to, for instance, or given suggestions for some meet-up groups in their area.

What’s still unclear is whether any of these findings can be used to get real psychiatric help to patients in real time. Paul Appelbaum, a professor of, and an expert on, psychiatry ethics at Columbia University (and the father of one of my colleagues), says it’s an “open secret” that clinicians in psychiatric emergency rooms will look up patients online if they have concerns about their potential to harm themselves or others, especially if the patient isn’t very forthcoming. But that process consists essentially of one-off Facebook stalking, not an in-depth linguistic analysis.

Now research is being done on the passive monitoring of patients, Appelbaum says. A smartphone could be used to remotely track changes in someone’s speech or movement. People in the throes of mania, for example, often talk more quickly, and they sometimes roam about at all hours of the night. Conversely, depressed people sometimes stay too still, planting themselves in bed or on the couch for days. “There are also many apps that have been developed that involve input from the patient: information about mood or thoughts or behavior, which can be monitored remotely for changes in their status,” Appelbaum says.

Birnbaum imagines a future in which his severely mentally ill patients might give him access to their digital footprint—including wearables, Facebook, and wherever else they live their lives online—so that he and his team could intervene if they begin to see signs of a psychotic relapse. “We know the earlier we intervene, the better the outcome,” Birnbaum says.

Anything involving doctors prying into their patients’ online lives could be done only with patient consent, most experts agree. Real, unresolved issues will come up if any of this ever comes to fruition, such as what happens if a patient revokes consent during a psychotic relapse, or how much social-media evidence can be weighed in hospitalization decisions, or even whether social media, with its jokes and memes and grandstanding, is a good mirror for anyone’s real mental state at all. Long before any of this happens, Birnbaum says, organizations such as the American Psychiatric Association should determine what the ethics and best practices are around monitoring patients’ social-media posts, so that patients’ rights can be protected even as their health is safeguarded.

That likely won’t be necessary for many years. Several experts I spoke with said they don’t know of any psychologists who monitor patients’ social-media data in a systematic way. And no one algorithm currently works well enough to accurately predict mental-health issues for an entire practice’s worth of patients based on social-media posts alone. (What would a hypothetical intervention even look like? A midnight drop-by with a sedative?)

One particularly cringeworthy example came in 2014, when an app made by a British charity, meant to predict suicide risk from the use of phrases such as “help me” on Twitter, was swiftly pulled after it was overrun by trolls and false positives (for example, “Someone help me understand why the bus is always late”). This type of technology, while promising, says Mike Conway, a professor at the University of Utah School of Medicine, “is not ready for prime time.”
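
The false-positive problem is easy to reproduce. The sketch below is a hypothetical phrase matcher in Python, not the charity's actual app: the function name and phrase list are assumptions. It shows how a plain substring check flags the bus complaint as readily as a genuine plea.

```python
# Hypothetical watch list; the real app's phrases are not given in the article
# beyond "help me".
WATCH_PHRASES = ("help me",)


def naive_flag(text, phrases=WATCH_PHRASES):
    """Flag a post if it contains any watched phrase, ignoring case.

    This deliberately ignores context, which is the weakness being shown.
    """
    lowered = text.lower()
    return any(phrase in lowered for phrase in phrases)


posts = [
    "Please help me, I can't do this anymore",
    "Someone help me understand why the bus is always late",
    "Great game tonight",
]

for post in posts:
    print(naive_flag(post), "|", post)
```

Both of the first two posts are flagged, although only one of them reads as a cry for help. Telling them apart takes context that a phrase list does not have.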

The day that it is, our doctors might deduce a lot about our mental states from our random updates. And so will we. In fact, the most important beneficiary of this kind of technology could be the average social-media user. It’s rare that in any given tweet you’d admit, “I’m lonely.” Instead, looking at all of your online missives over time could provide clues to your deepest subconscious urges, like a modern-day Rorschach painted with your own words.