The rise of powerful artificial intelligence, professor Stephen Hawking once warned, will be "either the best or the worst thing ever to happen to humanity." Unfortunately, by the time we find out which, it may already be too late—but a new video game simulation may offer clues as to what we might expect.

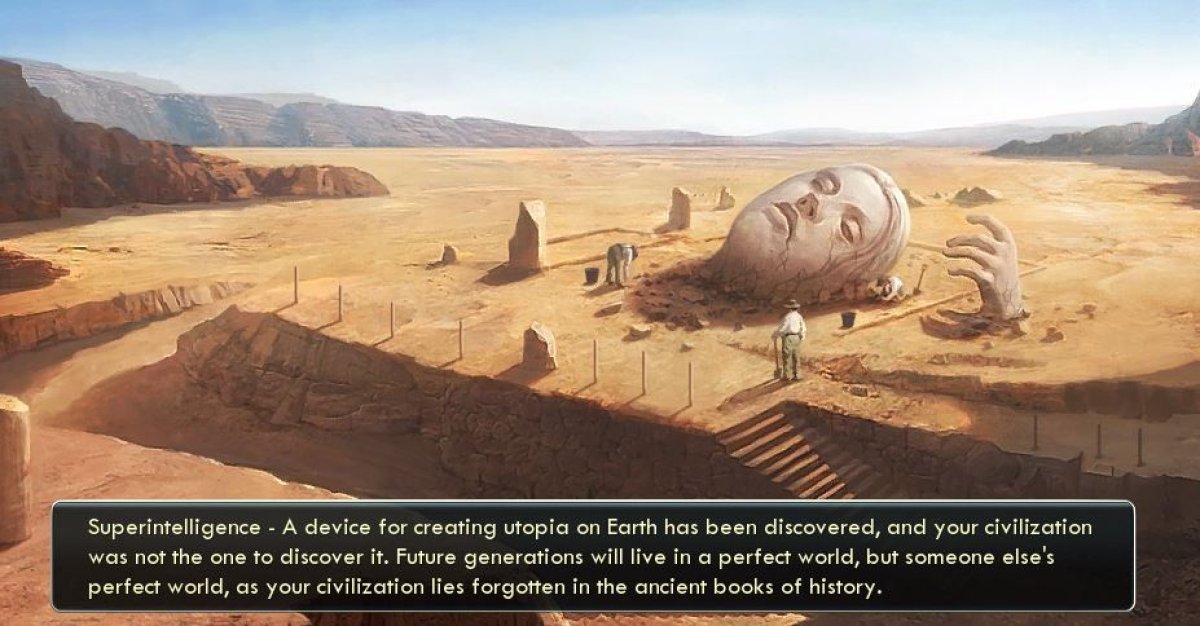

Scientists at the University of Cambridge's Centre for the Study of Existential Risk have built a "Superintelligence" modification for the classic strategy game Civilization 5 that envisions a scenario in which a smarter-than-human AI is introduced to society.

One outcome offers a dire prophecy of humanity's demise, in keeping with the modification's aim of helping players better understand the existential threat posed by advanced artificial intelligence.

"Artificial intelligence can initially provide some benefits, and eventually can turn into superintelligence that brings mastery of science to its discoverer," the researchers write in the add-on's description.

"However, if too much artificial intelligence research goes uncontrolled, rogue superintelligence can destroy humanity and bring an instant loss of the game."

In this scenario, a message appears in the game that reads: "A device for creating utopia on Earth has been discovered, and your civilization was not the one to discover it. Future generations will live in a perfect world, but someone else's perfect world, as your civilization lies forgotten in the ancient books of history."

It is not the first video game to imagine the detrimental consequences of a rogue AI: a game reenacting a well-known AI thought experiment went viral in October last year.

The game Paperclips explored the parable of an AI programmed to manufacture paperclips, first described by the philosopher Nick Bostrom in a 2003 paper exploring ethical issues in advanced artificial intelligence.

While the goal of the AI is simple, if it is not programmed to value human life, then it could eventually gather all matter in the universe, including human beings, in order to create more paperclips.

Both games highlight the fear that a machine smarter than humans will be impossible to switch off. At a conference in 2015, Bostrom asked why Neanderthals did not wipe out humans when they had the chance, which would have kept them from being displaced as the dominant species on the planet.

"They certainly had reasons," Bostrom said in his TED (technology, entertainment and design) talk. "The reason is that we are an intelligent adversary. We can anticipate threats and plan around them. But so could a superintelligent agent and it would be much better at that than we are."

Other researchers are already working on safeguards against such risks, most notably at Google's DeepMind, which is headed by another video game simulation pioneer, Demis Hassabis. In a peer-reviewed paper in 2016, researchers from DeepMind described an off switch that would override any previous commands and shut down the AI, preventing potential apocalyptic scenarios.

Dire warnings about advanced AI have become increasingly common in recent years within academic and tech circles, with Hawking one of the most outspoken voices.

"Success in creating AI could be the biggest event in the history of our civilization," Hawking said at an event in Cambridge in 2016. "But it could also be the last—unless we learn how to avoid the risks. Alongside the benefits, AI will also bring dangers like powerful autonomous weapons or new ways for the few to oppress the many."

The serial entrepreneur Elon Musk, whose ventures include the non-profit AI research company OpenAI, warned in 2014 that AI is "potentially more dangerous than nukes." In 2017 he joined Hawking in pledging support for a set of principles intended to protect mankind from machines and, ultimately, to offer hope for the future in this new technological era.

"Artificial intelligence has already provided beneficial tools that are used every day by people around the world," an open letter explaining the principles states.

"Its continued development, guided by the following principles, will offer amazing opportunities to help and empower people in the decades and centuries ahead."


About the writer

Anthony Cuthbertson is a staff writer at Newsweek, based in London.
