Data journalism is huge. I don't mean 'huge' as in fashionable - although it has become that in recent months - but 'huge' as in 'incomprehensibly enormous'. It represents the convergence of a number of fields which are significant in their own right - from investigative research and statistics to design and programming. The idea of combining those skills to tell important stories is powerful - but also intimidating. Who can do all that?

The reality is that almost no one is doing all of that, but there are enough different parts to the puzzle that people can easily get involved in one of them and go from there. To me, those parts come down to four things:

1. Finding data

'Finding data' can involve anything from having expert knowledge and contacts to being able to use computer assisted reporting skills or, for some, specific technical skills such as MySQL or Python to gather the data for you.

2. Interrogating data

Interrogating data well means having a good understanding of jargon and of the wider context within which the data sits, plus some statistics. A familiarity with spreadsheets can also save a lot of time.

3. Visualising data

Visualising and mashing data has historically been the responsibility of designers and coders, but an increasing number of people with editorial backgrounds are trying their hand at both - partly because of a widening awareness of what is possible, and partly because of a lowering of the barriers to experimenting with them.

4. Mashing data

Tools such as ManyEyes for visualisation, and Yahoo! Pipes for mashups, have made it possible for me to get journalism students stuck in quickly with the possibilities - and many catch the data journalism bug soon after.

How to begin?

So where does a budding data journalist start? An obvious answer would be "with the data" - but there's a second answer too: "With a question".

Journalists have to balance their role in responding to events with their role as an active seeker of stories - and data is no different. The New York Times' Aron Pilhofer recommends that you "Start small, and start with something you already know and already do. And always, always, always remember that the goal here is journalism." The Guardian's Charles Arthur suggests "Find a story that will be best told through numbers", while The Times' Jonathan Richards and The Telegraph's Conrad Quilty-Harper both recommend finding your feet and coming up with ideas by following blogs in the field and attending meetups such as Hacks/Hackers.

There is no shortage of data being released that you can get your journalistic teeth into. The open data movement in the UK and internationally is seeing a continual release of newsworthy data, and it's relatively easy to find datasets being released by regulators, consumer groups, charities, scientific institutions and businesses. You can also monitor the responses to Freedom of Information requests on What Do They Know, and on organisations' own disclosure logs. And of course, there's the Guardian's own datablog.

A second approach, however, is to start with a question - "Do speed cameras cost or save money?" for example, was one topical question that was recently asked on Help Me Investigate, the crowdsourcing investigative journalism site that I run - and then to search for the data that might answer it (so far that has come from a government review and a DfT report). Submitting a Freedom of Information request is a useful avenue too (make sure you ask for the data in CSV or similar format).

Whichever approach you take, it's likely that the real work will lie in finding the further bits of information and data to fill out the picture you're trying to clarify. Government data, for example, will often come littered with jargon and codes you'll need to understand. A call to the relevant organisation can shed some light. If that's taking too long, an advanced search for one of the more obscure codes can help too - limiting your search, for example, by including site:gov.uk filetype:pdf (or equivalent limitations for your particular search) at the end.

You'll also need to contextualise the initial data with further data. Say you have some information about a government department's changing wage bill, for example: has the department workforce expanded? How does it compare to other government departments? What about wider wages within the industry? What about inflation and changes in the cost of living? This context can make a difference between missing and spotting a story.
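As a rough sketch of that kind of contextualising, the Python below sets a wage bill against headcount and against inflation. Every figure in it is invented for illustration, and the function names `pct_change` and `real_terms` are my own labels rather than anything from a particular library.

```python
def pct_change(old, new):
    """Percentage change from old to new."""
    return (new - old) / old * 100


def real_terms(amount, index_then, index_now):
    """Re-express an earlier amount in current prices using a price index."""
    return amount * index_now / index_then


# Invented figures, for illustration only
wage_bill = {"then": 10_000_000, "now": 12_000_000}
headcount = {"then": 200, "now": 250}
price_index = {"then": 100.0, "now": 105.0}

# Pay per head in each period
pay_per_head_then = wage_bill["then"] / headcount["then"]
pay_per_head_now = wage_bill["now"] / headcount["now"]

# The earlier pay per head, expressed in today's prices
pay_per_head_then_real = real_terms(
    pay_per_head_then, price_index["then"], price_index["now"]
)

print("Wage bill change: %.1f%%" % pct_change(wage_bill["then"], wage_bill["now"]))
print("Pay per head change: %.1f%%" % pct_change(pay_per_head_then, pay_per_head_now))
print("Pay per head change, real terms: %.1f%%"
      % pct_change(pay_per_head_then_real, pay_per_head_now))
```

With these made-up numbers the headline wage bill rises by a fifth while pay per head actually falls, which is exactly the sort of difference that context can reveal.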

Quite often your data will need cleaning up: look out for different names for the same thing, spelling and punctuation errors, poorly formatted fields (e.g. dates that are formatted as text), incorrectly entered data and information that is missing entirely. Tools like Freebase Gridworks can help here.
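If you would rather script some of those checks than do them by hand, a minimal Python sketch might look like this. The alias table, the list of date formats and the function names are all assumptions made for the example, and a real dataset will need its own.

```python
from datetime import datetime

# Hypothetical alias table: different spellings of the same organisation
ALIASES = {
    "dft": "Department for Transport",
    "dept for transport": "Department for Transport",
}


def clean_name(raw):
    """Map known variants of a name onto one canonical spelling."""
    # Lower-case, drop full stops and collapse repeated spaces before the lookup
    key = " ".join(raw.strip().lower().replace(".", "").split())
    return ALIASES.get(key, raw.strip())


def parse_date(text, formats=("%d/%m/%Y", "%Y-%m-%d")):
    """Turn a date stored as text into a real date, or None if no format fits."""
    for fmt in formats:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    return None


def missing_fields(row):
    """List the columns in a row (a dict) that are empty or absent."""
    return [key for key, value in row.items()
            if value is None or str(value).strip() == ""]


print(clean_name("Dept. for Transport"))
print(parse_date("03/02/2010"))
print(missing_fields({"area": "North", "total": "", "date": None}))
```

Anything `parse_date` returns as `None`, or that `missing_fields` flags, is worth checking by eye rather than silently dropping.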

At other times the dataset you need will come in an inconvenient format, such as a PDF, Powerpoint, or a rather ugly webpage. If you're lucky, you may be able to copy and paste the data into a spreadsheet. But you won't always be lucky.

At these moments some programming knowledge comes in handy. There's a sliding scale here: at one end are those who can write scripts from scratch that scrape a webpage and store the information in a spreadsheet. Alternatively, you can use a website like Scraperwiki which already has example scripts that you can customise to your ends - and a community to help. Then there are online tools like Yahoo! Pipes and the Firefox plugin OutWit Hub. If the data is in an HTML table you can even write a one-line formula in Google Spreadsheets to pull it in. Failing all the above, you might just have to record it by hand - but whatever you do, make sure you publish your spreadsheet online and blog about it so others don't have to repeat your hard work.
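To give a flavour of the "script from scratch" end of that scale, here is a minimal sketch that pulls the cells out of a simple HTML table and turns them into CSV, using only Python's standard library. The sample table is invented, the names `TableParser` and `table_to_csv` are my own, and it assumes a plain table with no nesting or merged cells; a real page would first have to be downloaded (for example with `urllib.request.urlopen`).

```python
import csv
import io
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the text of every <td>/<th> cell, grouped into rows."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, or None outside a row
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


def table_to_csv(html):
    """Return the table rows found in html as CSV text."""
    parser = TableParser()
    parser.feed(html)
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(parser.rows)
    return out.getvalue()


# Invented sample table, for illustration only
SAMPLE = """<table>
<tr><th>Area</th><th>Cameras</th></tr>
<tr><td>North</td><td>12</td></tr>
<tr><td>South</td><td>7</td></tr>
</table>"""

print(table_to_csv(SAMPLE))
```

The CSV text it produces can be saved to a file and opened in any spreadsheet.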

Once you have the data you need to tell the story, you need to get it ready to visualise. Trim off everything peripheral to the story you want to show. There are dozens of free online tools you can then use to build the visualisation itself: ManyEyes and Tableau Public are good places to start for charts. This poster by A. Abela (PDF) is a good guide to what charts work best for different types of data.
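The trimming step is easy enough in a spreadsheet, but if the file is large it can also be scripted. This is a small sketch, assuming the data is in CSV with a header row; the column names and the function name `keep_columns` are invented for the example.

```python
import csv
import io


def keep_columns(csv_text, wanted):
    """Return CSV text containing only the named columns, in the order given."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    # extrasaction="ignore" drops any column not listed in `wanted`
    writer = csv.DictWriter(out, fieldnames=wanted,
                            extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return out.getvalue()


# Invented data, for illustration only
RAW = "area,code,notes,total\nNorth,N1,checked,12\nSouth,S1,,7\n"

print(keep_columns(RAW, ["area", "total"]))
```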

Play around. If you're good with a graphics package, try making the visualisation clearer through colour and labelling. And always include a piece of text giving a link to the data and its source - because infographics tend to become separated from their original context as they make their way around the web.

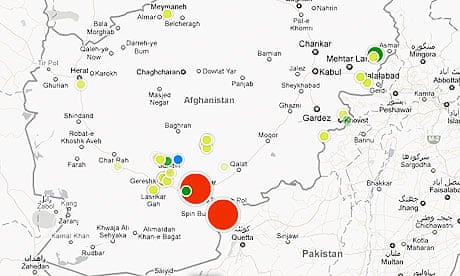

For maps, the wonderful OpenHeatMap is very easy to use - as long as your data is categorised by country, local authority, constituency, region or county. Or you can use Yahoo! Pipes to map the points of interest. Both of these are actually examples of mashups, which is useful if you like the word "mashups" and want to use it at parties. There are other tools too, but if you want to get serious about mashing up, you will need to explore the world of programming and APIs. At that point you may sit back and think: "Data journalism is huge."

And you know what? I said that once.

Paul Bradshaw is founder of Help Me Investigate and Reader in Online Journalism at Birmingham City University, and teaches at City University in London. He publishes the Online Journalism Blog.